Do you know the difference between artificial intelligence (AI) and neural networks? Many people mix the two up. As you navigate the complex landscape of AI technologies, you may be unsure which one to use for your app.

This article will unravel the differences between these two technologies clearly and concisely, helping you make informed decisions for your projects. With that knowledge, you will better understand where each one applies in the digital world.

Let’s navigate the fascinating world of AI and neural networks together.

Neural network vs AI

What is AI?

Artificial Intelligence is a field that focuses on creating intelligent machines capable of performing tasks that typically require human intelligence, such as learning, problem-solving, perception, and decision-making.

The concept dates back to the 1950s, with early developments in symbolic AI and expert systems. Over the years, the technology has evolved significantly, thanks to advancements in computing power, algorithms, and data availability.

Types of Artificial Intelligence

- Symbolic AI: also known as classical or rule-based AI, it uses a set of rules and logic to represent knowledge and make decisions. It operates on predefined symbols and patterns and is particularly suited for rule-based reasoning and expert systems. However, it struggles with tasks that involve uncertainty or require learning from data.

- Machine Learning: a subset of AI that focuses on algorithms and models that learn from data and improve over time without being explicitly programmed for each task. Models are trained on datasets to recognize patterns and make predictions. The main approaches are supervised learning (trained on labeled data), unsupervised learning (finding structure in unlabeled data), and reinforcement learning (learning from rewards), among others.

- Deep Learning: a subfield of machine learning that uses neural networks with multiple layers to learn complex hierarchical representations from data. These networks process raw data and extract features from it to perform tasks like image recognition, speech recognition, natural language processing, and more. Deep learning excels in tasks that involve large amounts of data and require high levels of accuracy and abstraction.
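To make the contrast between a hand-written rule and a rule learned from data more concrete, here is a minimal Python sketch. Everything in it is made up for illustration: the spam-flagging task, the function names `rule_based_flag` and `learn_threshold`, and the toy dataset. Real systems are far more involved, so treat it as a picture of the idea rather than a recipe.

```python
# Hypothetical toy task: flag a message as spam based on how many links it contains.

def rule_based_flag(link_count):
    """Symbolic approach: an expert writes the rule by hand and it never changes."""
    return link_count > 5


def learn_threshold(examples):
    """Machine learning approach: pick the threshold that best fits labeled examples."""
    candidates = sorted({count for count, _ in examples})
    best_threshold, best_correct = 0, -1
    for threshold in candidates:
        # Count how many examples this threshold classifies correctly.
        correct = sum((count > threshold) == is_spam for count, is_spam in examples)
        if correct > best_correct:
            best_threshold, best_correct = threshold, correct
    return best_threshold


# Made-up labeled data: (number of links, is it spam?)
training_data = [(0, False), (1, False), (2, False), (3, True), (4, True), (7, True)]

learned = learn_threshold(training_data)
print("hand-written rule flags a message with 4 links:", rule_based_flag(4))
print("learned rule flags a message with 4 links:", 4 > learned)
```

The hand-written rule stays the same no matter what the data says, while the learned threshold shifts whenever the training examples change.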

Hold on, we mentioned neural networks in the AI types. That’s suspicious. And you’ve probably guessed a bit of the difference already. However, let’s continue to sort everything out.

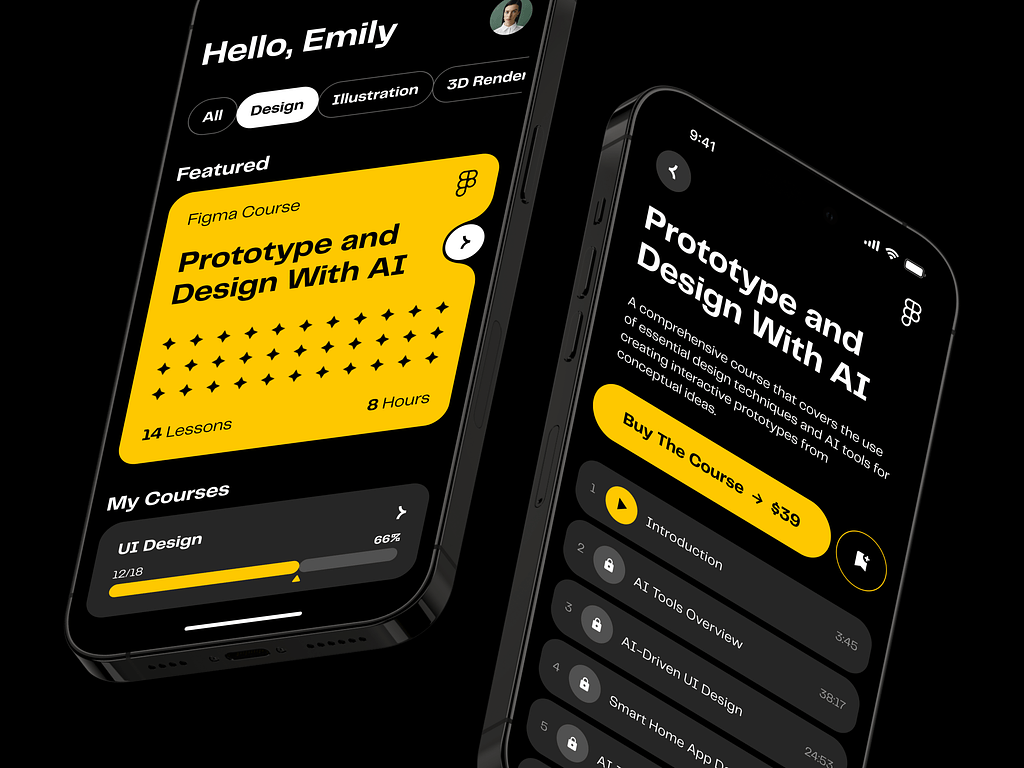

AI E-learning Mobile App by Shakuro

What are neural networks?

Neural networks are a specific type of machine learning model inspired by the structure and function of the human brain. They consist of interconnected nodes (neurons) arranged in layers, with each neuron linked to others through weighted connections.

Just as the brain processes information through signals transmitted between neurons, neural networks perform computations by passing input data through interconnected nodes. In a similar way, they can learn, adapt, and make decisions. Learning happens during training, when the network adjusts the weights of its connections based on the input data.
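Here is a rough sketch of that weight adjustment in Python, using the smallest possible "network": a single neuron with two inputs. The task (learning the logical OR function), the learning rate, and the number of training passes are arbitrary choices for this example, and real networks stack many such neurons into layers.

```python
import math


def sigmoid(x):
    """Squash any number into the range 0..1."""
    return 1.0 / (1.0 + math.exp(-x))


def predict(weights, bias, inputs):
    """One neuron: a weighted sum of the inputs passed through an activation."""
    total = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(total)


def train(samples, epochs=2000, learning_rate=0.5):
    """Nudge the weights after every sample to shrink the prediction error."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            output = predict(weights, bias, inputs)
            # For a sigmoid neuron with cross-entropy loss, the gradient with
            # respect to the weighted sum is simply (output - target).
            error = output - target
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


# Toy training data for logical OR: (inputs, expected output)
or_samples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]

weights, bias = train(or_samples)
for inputs, target in or_samples:
    print(inputs, "->", round(predict(weights, bias, inputs), 2), "expected", target)
```

Nobody tells the neuron what OR means. It starts with zero weights and arrives at useful ones only by being corrected on examples.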

Types of neural networks

- Convolutional Neural Networks (CNNs) are commonly used for image recognition and computer vision tasks, as they are effective at detecting patterns and features in visual data.

- Recurrent Neural Networks (RNNs) are suitable for processing sequential data, such as natural language processing tasks and time-series predictions.

Other architectures include Long Short-Term Memory (LSTM) networks, which are a variant of RNNs built to retain information over longer sequences, and Generative Adversarial Networks (GANs). Each has its own architecture and capabilities.
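The core ideas behind CNNs and RNNs can be sketched in a few lines of plain Python. The snippet below is a simplification under stated assumptions: `convolve_1d` slides a fixed kernel over one row of pixel values (real CNNs learn their kernels and work on 2D images), and `run_recurrent` uses two hand-picked weights instead of trained ones. Both function names are invented for this example.

```python
import math


def convolve_1d(signal, kernel):
    """Slide a small kernel over the signal, the core operation of a CNN layer.

    Like deep learning frameworks, this does not flip the kernel.
    """
    width = len(kernel)
    return [
        sum(signal[i + j] * kernel[j] for j in range(width))
        for i in range(len(signal) - width + 1)
    ]


def run_recurrent(sequence, input_weight, state_weight):
    """Carry a hidden state from step to step, the core idea of an RNN."""
    state = 0.0
    states = []
    for value in sequence:
        # The new state depends on the current input AND the previous state.
        state = math.tanh(input_weight * value + state_weight * state)
        states.append(state)
    return states


# A row of pixels with a dark-to-bright edge in the middle.
row_of_pixels = [0, 0, 0, 9, 9, 9]
edge_kernel = [-1, 1]
print(convolve_1d(row_of_pixels, edge_kernel))  # [0, 0, 9, 0, 0]

# A single "event" followed by silence: the state fades but does not vanish at once.
print(run_recurrent([1.0, 0.0, 0.0], input_weight=1.0, state_weight=0.5))
```

The convolution output peaks exactly where the edge sits, which is how a CNN locates features. The recurrent state stays above zero after the input goes quiet, which is how an RNN remembers earlier items in a sequence.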

Key differences between AI and neural networks

Now, let’s sum up the distinctions:

Artificial Intelligence encompasses a wide range of technologies and methodologies aimed at simulating human intelligence in machines. It involves the development of algorithms and systems that can perform tasks such as decision-making, problem-solving, and natural language processing.

Neural networks, on the other hand, are a specific type of AI technology inspired by the structure and function of the human brain. They learn from data and adapt through training. While they play a crucial role in AI app development, the field is not limited to neural networks and includes other techniques such as expert systems, genetic algorithms, and reinforcement learning.

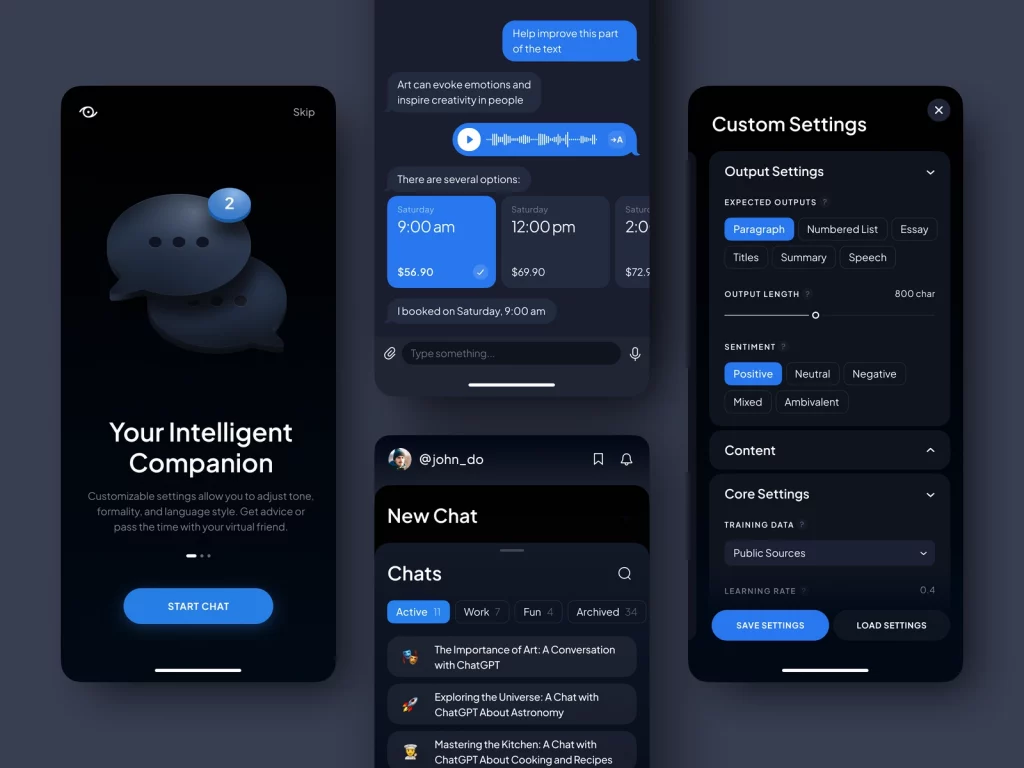

AI Prompt Assistant by Conceptzilla

Artificial Intelligence applications

Both technologies are being increasingly used across various industries to revolutionize processes, improve efficiency, and drive innovation. Here are some examples:

Healthcare:

- Diagnostics: AI algorithms can analyze medical images automatically, enabling the early detection of diseases like cancer and the identification of abnormalities in X-rays and MRI scans.

- Personalized medicine: analyze patient data to recommend personalized treatment plans and medication dosages based on individual health profiles, genetic information, and medical history.

- Drug discovery: neural networks help pharmaceutical companies accelerate drug discovery by predicting potential drug interactions, identifying drug targets, and analyzing molecular structures.

Finance:

- Fraud detection: detect fraudulent activities in real-time by analyzing transactional data, picking up unusual patterns, and flagging suspicious transactions for further investigation.

- Algorithmic trading: use AI to analyze market trends, forecast stock prices, and time trades, with the aim of improving trading strategies and returns.

- Risk assessment: models built on neural networks analyze credit scores, market data, and other variables to assess credit risk, evaluate loan applications, and support informed lending decisions.
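To give a feel for what "picking up unusual patterns" means in fraud detection, here is a deliberately simple Python sketch. It is not a neural network: it swaps in a classic statistical rule (the modified z-score based on the median) as a stand-in, and the function name `flag_unusual`, the 3.5 cutoff, and the transaction amounts are all assumptions made for this example.

```python
from statistics import median


def flag_unusual(amounts, cutoff=3.5):
    """Flag amounts that sit far from the typical value for this account."""
    center = median(amounts)
    # Median absolute deviation: a spread measure that outliers barely affect.
    spread = median([abs(amount - center) for amount in amounts])
    if spread == 0:
        return []  # every amount is (almost) identical, nothing stands out
    return [
        amount
        for amount in amounts
        if 0.6745 * abs(amount - center) / spread > cutoff
    ]


# Made-up card transactions for one customer, in dollars.
transactions = [12, 15, 11, 14, 13, 950, 12]
flagged = flag_unusual(transactions)
print("flag for review:", flagged)
```

Production systems look at many more signals than the amount, such as location, merchant, and timing, and often learn what counts as "unusual" from historical data instead of relying on a fixed cutoff.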

Autonomous vehicles:

- Computer vision: autonomous vehicles use neural networks for image and object recognition, so cars can detect obstacles, pedestrians, traffic signs, and lane markings for safe navigation.

- Sensor fusion: AI algorithms integrate data from various sensors, such as radar, lidar, and cameras, using neural networks to enhance perception accuracy, predict trajectories, and improve decision-making for autonomous driving.

- Path planning: AI algorithms also generate optimal routes, anticipate traffic conditions, and make real-time decisions to navigate autonomous vehicles safely and efficiently in complex urban environments.

Cybersecurity:

- Threat detection: cybersecurity systems take advantage of Artificial Intelligence to detect and respond to threats by analyzing network traffic, monitoring user behavior, and identifying anomalous activities.

- Malware detection: neural networks are used to analyze patterns in code, URLs, and files to identify and mitigate malware attacks, phishing scams, and other malicious activities.

- Vulnerability assessment: AI-based tools assess cybersecurity vulnerabilities, prioritize security patches, and enhance network defenses by using neural networks for predictive analysis, threat modeling, and risk mitigation strategies.

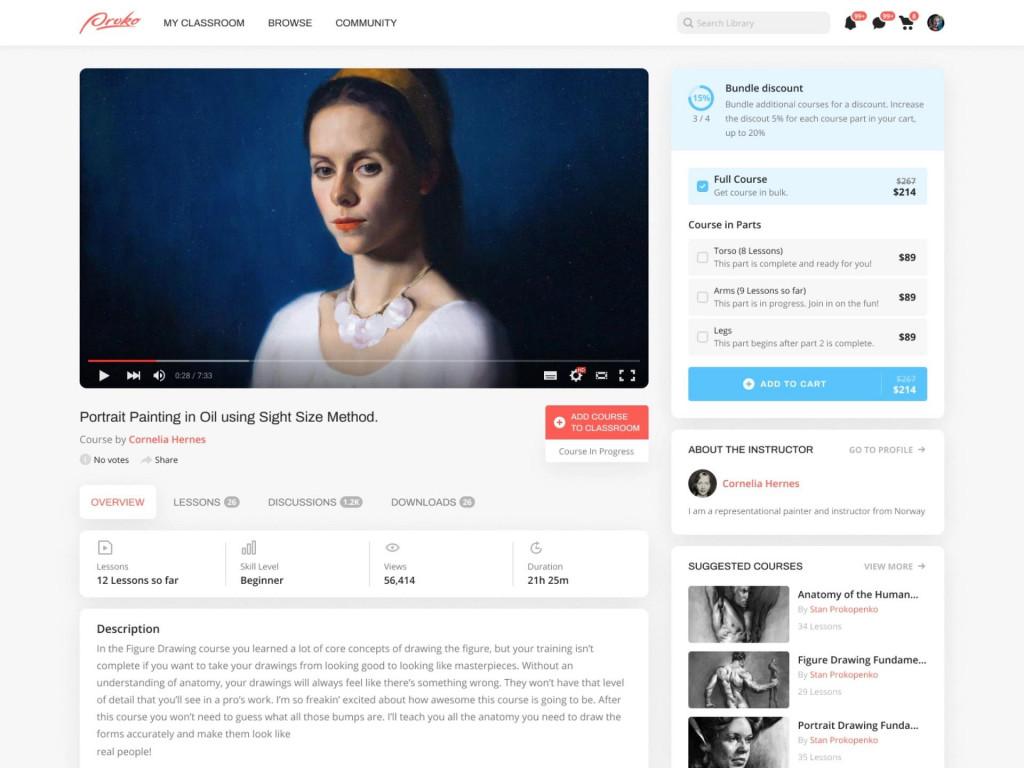

We used AI in one of our recent e-learning collaborations with Proko. We implemented complex algorithms for data analytics and reports. Students can get basic feedback without interacting with human instructors, as well as recommendations for other courses based on their interests.

Future trends in AI and neural networks

Noteworthy trends include reinforcement learning, generative adversarial networks (GANs), and explainable AI.

Here’s an overview of these trends and their potential impact on the future:

Reinforcement learning

Reinforcement learning is a machine learning approach in which software learns to make decisions by interacting with its environment and receiving feedback, in the form of rewards or penalties, based on its actions. This approach mirrors the trial-and-error process that humans often follow to reach their objectives. Over time, the software learns to perform complex tasks and make decisions autonomously. That’s why reinforcement learning has been instrumental in areas such as robotics, gaming, recommendation systems, and autonomous agents.
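A small Python sketch can show this trial-and-error loop in action. It uses one of the simplest reinforcement learning setups, a "multi-armed bandit" with an epsilon-greedy strategy. The function name `run_bandit`, the three reward probabilities, and the 10% exploration rate are assumptions picked for illustration only.

```python
import random


def run_bandit(reward_probabilities, steps=5000, epsilon=0.1, seed=0):
    """Learn by trial and error which option pays off most often."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    option_count = len(reward_probabilities)
    estimates = [0.0] * option_count  # the agent's current guess per option
    counts = [0] * option_count       # how often each option was tried

    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: occasionally try a random option.
            action = rng.randrange(option_count)
        else:
            # Exploit: pick the option that looks best so far.
            action = max(range(option_count), key=lambda a: estimates[a])

        # The environment answers with a reward of 1 or 0.
        reward = 1.0 if rng.random() < reward_probabilities[action] else 0.0

        # Move the estimate toward the observed reward (running average).
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]

    return estimates, counts


# Three hypothetical options that pay off 20%, 50%, and 80% of the time.
estimates, counts = run_bandit([0.2, 0.5, 0.8])
best_action = max(range(len(counts)), key=lambda a: counts[a])
print("times each option was chosen:", counts)
print("option chosen most often:", best_action)
```

The agent is never told which option is best. It works that out from the rewards alone, which is the same principle, at a much smaller scale, that drives game-playing agents and robotics controllers.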

Generative Adversarial Networks (GANs)

GANs are neural networks that learn to generate synthetic data, images, or content that closely resemble real data by training two competing networks: a generator and a discriminator. The generator creates new data samples, while the discriminator evaluates the authenticity of the generated samples. GANs have been used for image generation, style transfer, data augmentation, and other creative applications.
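The tug-of-war between the two networks shows up in how their losses are scored. The Python sketch below skips the networks themselves and computes only the losses from made-up discriminator outputs. It assumes the commonly used binary cross-entropy formulation with the "non-saturating" generator loss; other GAN variants use different objectives, and the function names here are invented for the example.

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Low when real samples score near 1 and generated samples score near 0."""
    real_term = -sum(math.log(score) for score in real_scores) / len(real_scores)
    fake_term = -sum(math.log(1.0 - score) for score in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Low when the discriminator is fooled into scoring generated samples near 1."""
    return -sum(math.log(score) for score in fake_scores) / len(fake_scores)


# Hypothetical discriminator outputs: the probability that a sample is real.
early = {"real": [0.9, 0.8], "fake": [0.1, 0.2]}  # fakes are easy to spot
later = {"real": [0.6, 0.5], "fake": [0.5, 0.4]}  # fakes look convincing

for name, scores in (("early", early), ("later", later)):
    print(
        name,
        "discriminator loss:", round(discriminator_loss(scores["real"], scores["fake"]), 3),
        "generator loss:", round(generator_loss(scores["fake"]), 3),
    )
```

As the generated samples become more convincing, the generator's loss falls while the discriminator's loss rises. During real training, each network is updated in turn to push its own loss back down.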

Explainable AI

Explainable AI focuses on developing machine learning methods that provide transparent explanations for their decisions and predictions. It aims to enhance the trust, accountability, and understanding of Artificial Intelligence systems by enabling users to comprehend the reasoning behind generated outcomes.
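One of the simplest forms of explanation is to show how much each input pushed a prediction up or down. The Python sketch below does this for a linear scoring model, where each feature's contribution is just its weight multiplied by its value. The loan-scoring scenario, the weights, and the function name `explain_prediction` are all hypothetical, and explaining deep neural networks takes more elaborate techniques.

```python
def explain_prediction(weights, bias, features):
    """Return the model's score plus each feature's share of it."""
    contributions = {name: weights[name] * value for name, value in features.items()}
    score = bias + sum(contributions.values())
    return score, contributions


# Made-up weights of a linear loan-scoring model (a positive score means "approve").
loan_weights = {"income_k": 0.04, "missed_payments": -1.5, "years_employed": 0.3}
applicant = {"income_k": 50, "missed_payments": 2, "years_employed": 4}

score, contributions = explain_prediction(loan_weights, -1.0, applicant)
print("score:", round(score, 2))
# List the features from most negative to most positive influence.
for name, amount in sorted(contributions.items(), key=lambda item: item[1]):
    print(f"  {name}: {amount:+.2f}")
```

With a breakdown like this, an applicant can see that the missed payments, not the income, drove the decision. That is the kind of transparency explainable AI aims to bring to more complex models.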

These technologies have the potential to reshape industries, drive innovation, and improve quality of life through applications in healthcare, education, sustainability, and other areas of society. However, you should still consider the ethical issues around privacy, security, bias, and accountability to ensure responsible and beneficial deployment.

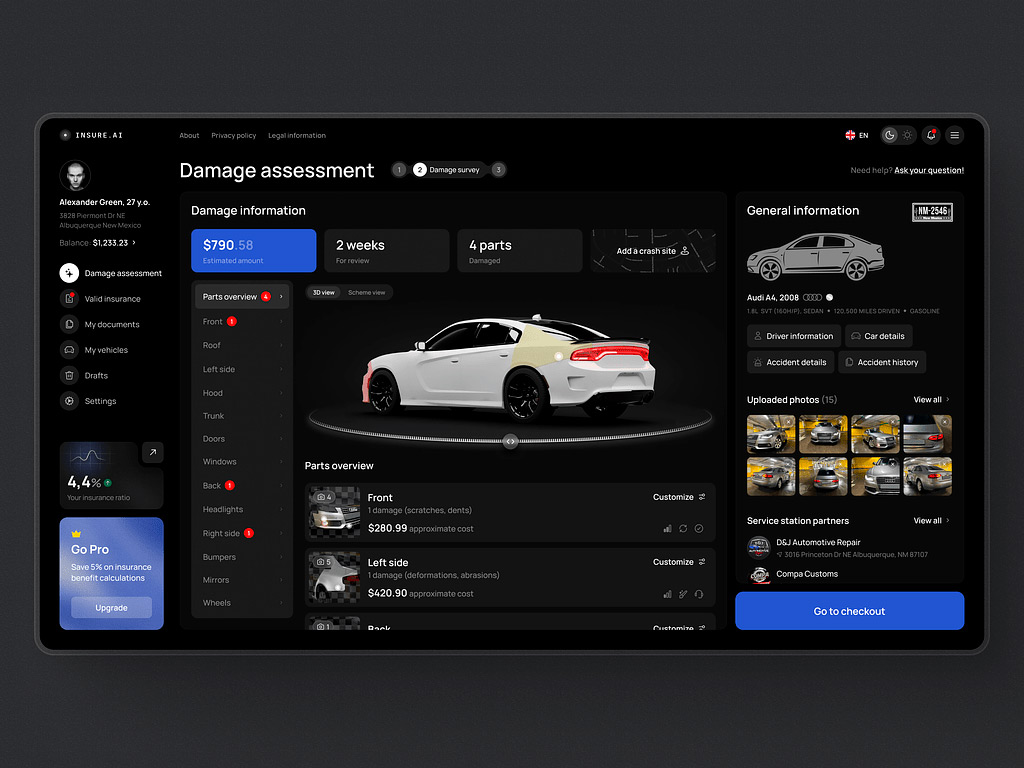

AI Insurance Web Design Concept by Shakuro

AI vs neural network: choose your character

The distinction lies in scope and function. AI is the broader concept of simulating human intelligence in machines, while neural networks are a subset that mimics the interconnected structure of the human brain to process information.

When comparing Artificial Intelligence vs neural networks, it is important to consider the complexity of the task at hand. The broader AI toolkit, with its diverse range of applications and problem-solving approaches, fits tasks that call for rule-based reasoning, decision-making, or a combination of several techniques.

On the other hand, neural networks, with their ability to process massive amounts of data and detect patterns, are best suited for tasks that involve pattern recognition, image or speech processing, and predictive analytics.

Do you have a project based on these technologies? Contact us and together we will build a cutting-edge app that actually solves users’ problems.