Have you ever poured your heart into designing something, only to find later that it just doesn’t connect with users? Grrr, I know. But! You can gain an amazing superpower called usability testing: a peek behind the scenes at how actual humans behave around what you create.


Let’s face it: no one designs perfect interfaces the first time (or even the fifth). That’s where usability testing comes in. It can feel intimidating at first, because all those methods, tools, and buzzwords out there will make your head spin. Trust me, I was there not long ago. But once you start doing it, it all falls into place. And it really does take a lot of the guesswork out of design choices. Consider this: if you don’t test, you’re essentially flying blind. Would you fly blind? No way. By the way, have you ever struggled with an app or a website and thought, “Why did they never fix that?” Odds are, they didn’t test properly.

From personal experience (I’ve been doing usability research for years), I can assure you that learning different approaches has changed the way I work on projects. It’s like putting on a pair of glasses and suddenly seeing everything clearly. Some methods are quick and easy. Others take more work but pay bigger dividends. The secret sauce is finding the right balance for your project. Stick with me, and we’ll explore some easy-to-start usability testing methods together.

Introduction to Usability Testing

What is Usability Testing?

Core Definition and Objectives

Usability testing is a way of checking how easy it is for people to use a product, such as an app or a website. It’s about observing real people to see whether they can achieve their goals quickly and with as little trouble as possible. You look for problems and confusing points so you can fix them before release.

The main objectives of usability tests are:

- Find out problems: Find out what is hard or confusing for users.

- Improve user experience: Make the product easier and more enjoyable to use.

- Validate design decisions: Determine if your design is well-suited for your target audience.

- Get feedback: Hear directly from users about their experience.

Think of it like this: If you’re baking a cake, usability testing is like tasting it before serving to make sure it tastes good and isn’t too sweet or bitter!

A popular framework is Whitney Quesenbery’s 5 E’s: effective, efficient, engaging, error tolerant, and easy to learn. Together, these qualities contribute to a positive UX. You don’t have to follow the framework strictly, but it’s good to keep in mind.

Historical Background and Development

Usability testing grew out of human factors and ergonomics work from the 1940s to the 1970s, as technology became more complex. Experts realized that just because something was technically correct didn’t mean it was simple enough for regular folks to use. At that stage, usability was mostly about efficiency and safety. The aim was to fit tools to the people using them rather than forcing people to adapt to machines.

Computers became common in businesses and universities by the 1970s. However, they were still complicated for anyone but professionals. From the late 1970s to the early 1980s, organizations like Xerox PARC and Apple began setting up usability labs for product testing. These labs used cameras, one-way mirrors, and observational techniques to watch users operate software and hardware. Donald Norman, a cognitive scientist, popularized the phrase “user-centered design” in the 1980s. He was convinced that products needed to be designed around actual users’ needs and behavior rather than engineers’ assumptions.

When the internet expanded in the 1990s, websites became a major focus for usability testing. Companies found that if a website was hard to navigate, people would leave and go to a different one. This era also saw new tools emerge, including heatmaps (to track where users clicked) and A/B testing (to compare two designs). With broadband internet, remote testing became possible. Instead of bringing users into a lab, researchers could observe them using their own devices at home.

During the 2000s, smartphones changed the way people engaged with technology. Designs had to accommodate smaller screens, touch input, and new interaction patterns. At the same time, Agile methodologies became popular in software development. These practices focus on frequent iterations and feedback loops, and usability testing fits naturally into them.

Today, usability research puts accessibility and inclusion front and center. Designers have to create products for everyone, regardless of age, ability, or background. Support for voice commands and screen readers is now a baseline expectation.

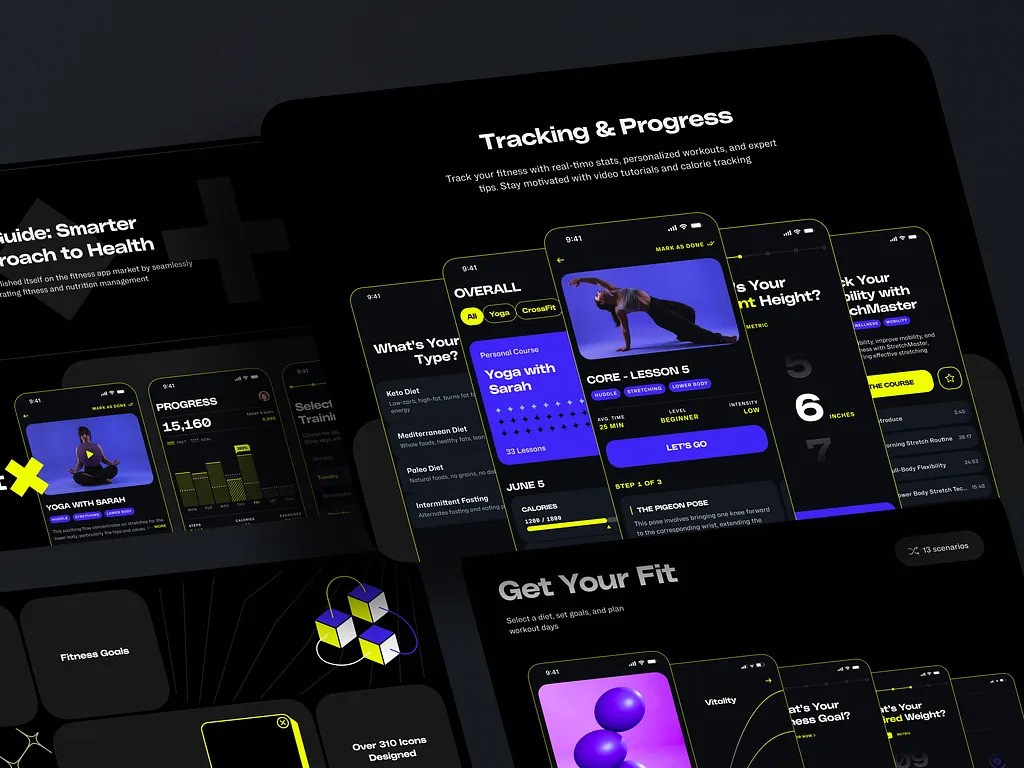

Web Design for a Yoga & Wellness Platform by Shakuro

Why Usability Testing Matters

Usability testing is more than staging an experiment. It’s an investment that directly affects your product’s success, including user retention, conversion rates, and return on investment (ROI).

Impact on User Retention

Retention measures whether users keep coming back to your product over time. If they find your product difficult, they abandon it for an alternative. You can make your app or website astonishingly beautiful and hype it all over the internet, but if it’s frustrating to use, people will give up on it no matter what. Truth hits hard, huh?

How does usability testing help with retention? Here are three ways:

- Identify pain points: You reveal issues that might drive users away, such as slow loading times, confusing navigation, or unclear instructions.

- Improve satisfaction: You create smoother, more enjoyable interactions that help people achieve their goals.

- Build trust: When users feel confident using your product, they’re more likely to stick with it long-term.

Example:

Imagine an e-commerce app where customers struggle to add items to their cart because the button is hidden. Without usability testing, this issue might go unnoticed until it’s too late, and people will rage-quit and buy elsewhere. No gain, just pain.

Changes in Conversion

When measuring conversion, you calculate the share of users who take the action you want them to take: placing an order, signing up for a service, completing a task, etc.

Usability research helps you grow these numbers in several ways:

- Lift the curtain: Uncover obstacles that prevent people from converting. For example, customers may abandon their carts because of a complicated checkout process. Remove this obstacle, and more of them will complete checkout.

- Optimize design: You simplify user paths through UX improvements. The easier the path, the more actions get completed.

- Gain trust: When people see a clear path, they trust it more. The same goes for simple layouts: when nothing looks suspicious, users are more likely to buy or subscribe.

Example: A confusing sign-up form on your website can cost you potential customers. Users get lost in certain fields or don’t understand the terms of service. Simplifying the form and clarifying the language can noticeably raise conversion rates.
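Conversion rate is simply completed actions divided by visitors over the same period. Here is a minimal Python sketch of that calculation and the relative lift between two versions. It assumes you already count visitors and completed sign-ups for each version, and the numbers are hypothetical.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the target action (0.0 to 1.0)."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(before: float, after: float) -> float:
    """Relative change between two rates, e.g. 0.25 means a 25% increase."""
    return (after - before) / before


# Hypothetical sign-up numbers before and after simplifying a form.
before = conversion_rate(conversions=120, visitors=4000)
after = conversion_rate(conversions=150, visitors=4000)
print(f"{before:.2%} -> {after:.2%} (lift {relative_lift(before, after):+.0%})")
```

A lift measured this way only shows that the numbers moved. To attribute the change to the redesign, compare both versions over the same period, ideally in an A/B test.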

ROI of Investing in Usability

The idea is simple: you compare the financial benefit of usability testing with the cost of conducting it.

Financial benefits? Aren’t we talking about design here? We are, but testing directly affects both your costs and your revenue:

- Reduces development expenses: Imagine you’ve assembled a wardrobe and then found a few extra screws. Figuring out where they go now that everything is put together is a real headache. The same applies here: catching and fixing issues early saves money because you avoid costly redesigns later.

- Increases revenue: Higher user engagement, more sales, and greater customer satisfaction—all contribute to revenue growth.

- Lower support costs: When the product works like clockwork, users need far less customer support. You protect not only your brand image but also your budget.

- Brand reputation: Ease of use and friendliness strengthen your brand image and attract more loyal customers.

Example:

Let’s assume you invest $5,000 in usability research for a new app. You find a show-stopper issue that was causing people to uninstall the app after their first use. After you release the fix, active users increase by 20% and subscription sign-ups rise by 15%. If the extra revenue exceeds the $5,000 you spent, your return on investment is positive.
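The usual way to express this is ROI = (benefit − cost) / cost. The short Python sketch below applies it to the example. The $5,000 cost comes from the text. The $20,000 revenue gain is a purely hypothetical figure, because the example does not state one.

```python
def usability_roi(benefit: float, cost: float) -> float:
    """ROI = (benefit - cost) / cost; a positive value means the study paid off."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return (benefit - cost) / cost


study_cost = 5_000        # cost of the usability research (from the example)
extra_revenue = 20_000    # hypothetical revenue gained after the fix
print(f"ROI: {usability_roi(extra_revenue, study_cost):.0%}")  # ROI: 300%
```

In practice, the hard part is estimating the benefit, not the arithmetic. Attribute only the revenue change you can reasonably tie to the fix.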

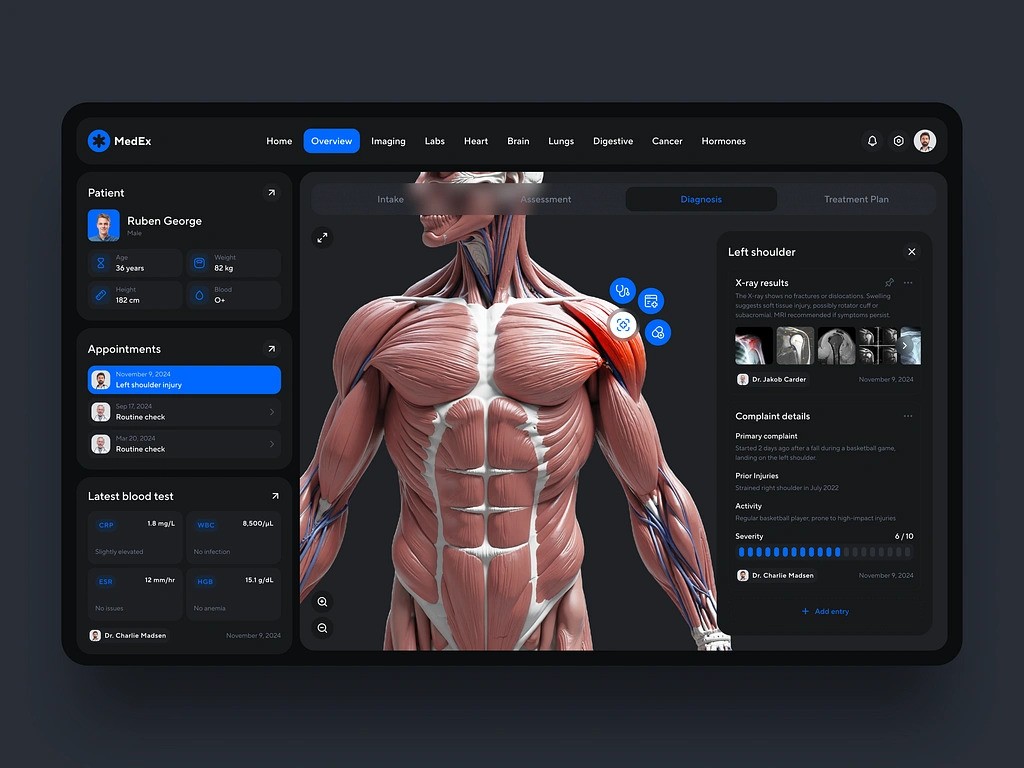

Medical Management Software Dashboard by Shakuro

Foundational Elements of Usability Testing

Key Components

Role of the Facilitator

The facilitator is the person who runs the usability test and interacts with participants. The key is guiding the session while keeping the results unbiased and meaningful.

Their main responsibilities include:

- Set the stage: Explain the purpose of the test and put participants at ease. They should understand that you’re testing the product, not their skills or tastes.

- Guide through tasks: Give clear, concise instructions for each task without dropping any hints.

- Encourage thinking aloud: Ask participants to voice their thoughts and actions as they interact with the product. Their reasoning gives you a window into your product’s problems.

- Observe and take notes: Keep an eye on how users behave, noting any difficulties or unexpected actions.

- Stay unbiased: Avoid misleading participants with your words or body language. For example, don’t say “good job” after every action—this might skew their behavior.

Sounds hard at times, huh? When I was conducting UX interviews, holding back my inner let-me-help-you-real-quick person was the most difficult part. I literally had to bite my tongue. Here are a few more tips:

- Be patient: This matters especially with less tech-savvy or older participants. Give them enough time to think, and let them act as they would when using your app at home.

- Ask open-ended questions: Instead of asking yes/no questions, ask for explanations (e.g., “Why did you choose that option?”).

- Handle mistakes gracefully: If a person makes a mistake, don’t correct them immediately. Let them figure it out unless it derails the test.

Designing Effective Tasks

Even though you aim for natural conditions, your usability study needs a clear structure and well-defined tasks: specific activities participants perform during testing. The tasks should simulate real-world use cases and help you evaluate whether users can accomplish key goals with your product.

From my experience, the tasks should be:

- Realistic: Reflect scenarios that can actually happen in real life rather than artificial or overly simplified situations.

- Specific but flexible: Be clear about what you want users to do, but give some room for exploration. For example, instead of saying “Click the ‘Add to Cart’ button,” say “Find a product and add it to your cart.”

- Measurable: Set clear success criteria so you can objectively evaluate results.

- Balanced: Include a mix of easy and challenging tasks to get a full picture.

Easier said than done. How do you meet these criteria and stay objective? Start with the core: your users. What are the most important things they need to do with your product? Their goals should form the basis of your tasks. Instead of spreading your efforts thin, focus on high-priority tasks aligned with the objectives at hand.

To avoid misunderstandings, write tasks in simple language, without technical jargon or complex terms. Try the scenarios yourself and ask colleagues to do trial runs to smooth out any rough edges.
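One way to keep tasks measurable is to write each one down together with an explicit success criterion. The Python sketch below is one hypothetical way to do that. The field names `target_screen` and `time_limit_s` are illustrative assumptions, not part of any testing tool.

```python
from dataclasses import dataclass


@dataclass
class Task:
    """One test task in plain language, plus a measurable success criterion."""
    prompt: str          # what the facilitator reads out, without jargon
    target_screen: str   # hypothetical: the screen that counts as "done"
    time_limit_s: int    # hypothetical cutoff after which the task counts as failed


@dataclass
class Attempt:
    final_screen: str
    seconds: float


def is_success(task: Task, attempt: Attempt) -> bool:
    """Success = reached the target screen within the time limit."""
    return attempt.final_screen == task.target_screen and attempt.seconds <= task.time_limit_s


add_to_cart = Task(
    prompt="Find a product you like and add it to your cart.",
    target_screen="cart",
    time_limit_s=120,
)
print(is_success(add_to_cart, Attempt(final_screen="cart", seconds=45)))     # True
print(is_success(add_to_cart, Attempt(final_screen="search", seconds=130)))  # False
```

The prompt stays open-ended ("find a product"), while the success check stays strict. That is the "specific but flexible" balance described above.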

Participant Selection Criteria

Selecting the right slice of your target audience for usability tests is another pillar of success. Without relevant participants, you won’t get actionable feedback and will quickly stray off course.

Obviously, you should match participants to your target audience by age, gender, location, income level, and so on. Picking users from outside that audience won’t do you any good. Invite people who WILL use your product in real life. Where do these criteria come from? From market research or a review of your personas.

At the same time, participants should vary in skill level. Yes, the tasks should be balanced, but you need to see how experience affects usability. Both novices and experts should be able to complete an action. Also, don’t hesitate to invite people with disabilities, especially if your audience includes users aged 50 and above. Conditions such as poor eyesight, for instance, can reveal UX issues that a regular run would miss.

Finally, aim for 5–10 participants. This usually uncovers most major usability issues. However, if your product is complex or highly specialized, you might need a larger sample.
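The 5–10 rule of thumb is usually traced back to Nielsen and Landauer’s problem-discovery model. It estimates the share of problems found by n users as 1 − (1 − p)^n, where p is the chance that a single user runs into a given problem. The sketch below assumes their reported average of p = 0.31. Your product’s p may be quite different, and the model treats every problem as equally likely to be found.

```python
def share_of_problems_found(n_users: int, p: float = 0.31) -> float:
    """Expected share of usability problems found by n users: 1 - (1 - p) ** n.

    p is the probability that one user hits a given problem. 0.31 is an
    assumed average; estimate your own p from pilot sessions if you can.
    """
    return 1 - (1 - p) ** n_users


for n in (1, 3, 5, 10, 15):
    print(n, f"{share_of_problems_found(n):.0%}")
```

With p = 0.31, five users are expected to surface about 84% of problems and ten about 98%. That is why each extra participant beyond that range adds little.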

Rental App Design Concept by Shakuro

Ethical Considerations

When working closely with other people, their safety, comfort, and trust are your top priority. During usability tests, you will handle sensitive personal information, which you must also protect from leaks. Below are a few things to pay close attention to.

Informed Consent

Whether people take part voluntarily or for payment, they have to sign an informed consent form. Informed consent is a fundamental ethical principle: it ensures that participants are fully aware of what they’re agreeing to before taking part in the study.

You must inform them about:

- The purpose of the test.

- Tasks they will perform.

- How their data will be collected, used, and stored.

- Their rights as participants, including the ability to withdraw at any time without penalty.

Why does it matter? Apart from legal requirements such as the GDPR in Europe, transparency builds trust. Clear terms of participation make usability testing more comfortable for everyone.

The consent form usually covers the purpose of the test, its duration and format, risks and benefits, recording, data handling, and contact details. Before signing, people should have some time to make up their minds. And even after they sign, let them withdraw from the study at any point.

Privacy

Participant privacy is a sensitive yet crucial matter for maintaining trust. People are more willing to take part in a test if they feel safe and respected.

You also have to protect participants’ anonymity in line with regulations such as the GDPR or CCPA. Your company’s reputation is at stake here.

Instead of guarding the information day and night, you can remove identifying details like names, addresses, and contact information from recordings, notes, and reports. Pseudonyms work fine as well. Keep only the non-sensitive data the research actually needs. Store whatever you keep in encrypted form on secure servers or in cloud storage with restricted access.

If people don’t want to disclose certain information, let them choose what to share. For instance, if a participant wants to take a remote usability test without a camera or microphone, respect that choice.
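Removing identifying details and replacing them with pseudonyms can be scripted before notes are shared with the wider team. The Python sketch below assumes session records are dictionaries. The field names in `IDENTIFYING_FIELDS` are hypothetical and should be adapted to your own data.

```python
import re

# Hypothetical identifying fields; adapt this list to your own records.
IDENTIFYING_FIELDS = {"name", "email", "phone", "address"}


def pseudonymize(records):
    """Replace identifying fields with P01, P02, ... and scrub full names from notes."""
    cleaned = []
    for i, record in enumerate(records, start=1):
        pid = f"P{i:02d}"
        notes = record.get("notes", "")
        name = record.get("name")
        if name:
            # Replace the participant's full name wherever it appears in free-text notes.
            notes = re.sub(re.escape(name), pid, notes, flags=re.IGNORECASE)
        safe = {k: v for k, v in record.items() if k not in IDENTIFYING_FIELDS}
        safe["participant"] = pid
        safe["notes"] = notes
        cleaned.append(safe)
    return cleaned


raw = [
    {"name": "Anna Smith", "email": "anna@example.com", "age_group": "30-39",
     "notes": "Anna Smith hesitated on the checkout page."},
]
print(pseudonymize(raw))
```

This sketch only catches the full name as written. A first name or nickname on its own would slip through, so a manual review pass is still worthwhile. If you keep a key linking IDs back to real names, store it separately from the data.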

Handling Sensitive Data

Health and financial data call for an even higher level of protection. You’ll often encounter such data when testing wellness or banking apps, and extra care is a must.

I admit, my hands often went cold as ice on these projects. But no worries: here is what you can do for an additional layer of protection.

- No random collecting: Gather only the data that is absolutely necessary for the study.

- Encrypt info: Use strong encryption and passwords when sharing and storing data.

- Limit access: Restrict access to sensitive data to those directly involved in the study.

- Obtain explicit consent: Clarify why sensitive data is needed and how it will be used. Get additional written consent before proceeding.

- Follow legal guidelines: Consult HIPAA (US healthcare data) or PCI DSS (payment card data) for the correct procedures.

- Dispose securely: Destroy sensitive data by shredding paper documents or wiping digital files. Make sure you do not leave any traces on test devices.

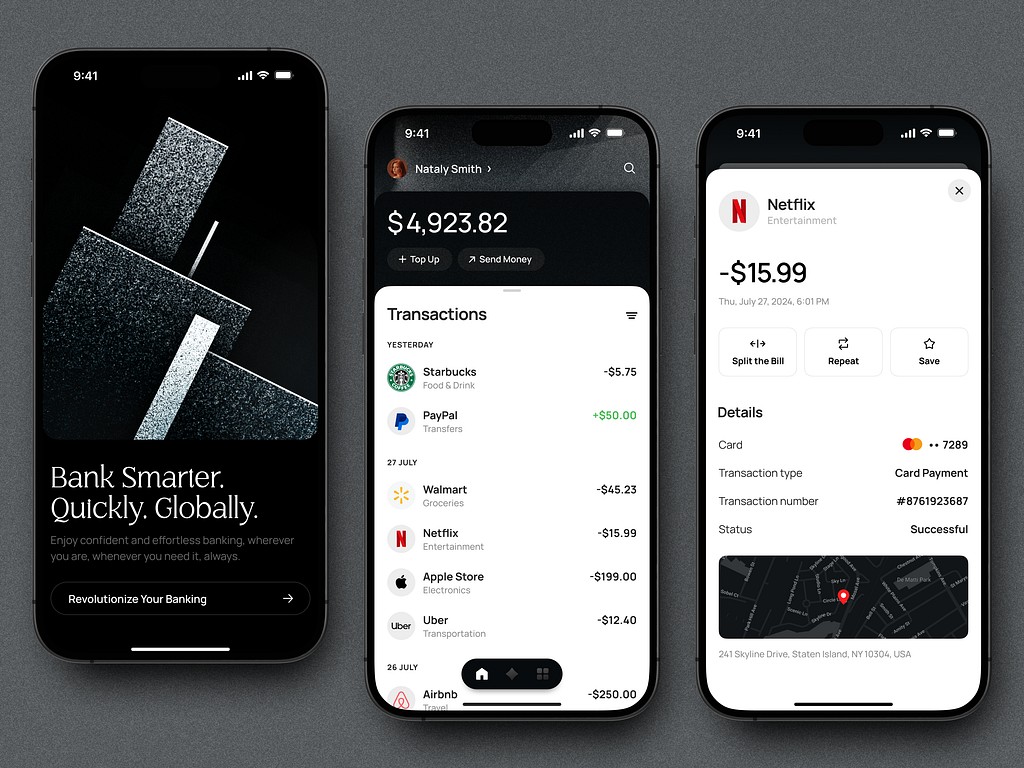

I’ll give you a real-life example from my work experience. Back in the day, I had to run UX testing for a banking app. Instead of entering their own personal details, such as their name, participants used fictional data I had prepared in advance (or made up on the spot), so nothing could be traced back to real accounts. I also reminded everyone that their real information wasn’t needed for the test, so they wouldn’t enter it by accident.

Types of Usability Testing

Usability testing methods fall along three main dimensions: interaction style, location, and data type:

- Moderated vs unmoderated

- Remote vs in-person

- Qualitative vs quantitative

Each of them splits into several subtypes as well.

| Dimension | Type | Description |
|---|---|---|
| By interaction style | Moderated | Conducted with a facilitator who supervises the session. For example, lab or guerilla testing. |
| By interaction style | Unmoderated | No facilitator; users solve tasks on their own. For example, surveys, polls, eye-tracking. |
| By location | Remote | Participants take tests from other locations, usually at home. Session recordings, for instance. |
| By location | In-person | People come to your office and solve tasks under controlled conditions. Lab testing belongs here too. |
| By data type | Qualitative | Users’ feedback on why they behave the way they do. For instance, post-test interviews. |
| By data type | Quantitative | Metrics such as time-on-task and error rate that track improvements over time. |

By Interaction Style

Moderated

You guide participants through the test in real time, taking notes on their behavior and asking questions.

Lab usability testing

Lab usability testing takes place in a controlled environment, often called a “usability lab.” You invite people to a lab or office where they interact with your product while being observed. Cameras, one-way mirrors, and recording equipment capture user actions and facial expressions.

It may sound like a science experiment, but this method minimizes distractions, so participants can focus solely on the task at hand. You observe users closely, including their body language and facial reactions. At the same time, you guide participants, ask follow-up questions, and clarify tasks in real time.

Guerilla testing

This method is also moderated, but instead of a controlled environment, it takes place in public spaces like streets, cafes, etc. You approach random people and ask them to test your product for a few minutes, focusing on specific features.

It’s an informal, quick, and cost-effective way to gather immediate feedback. You don’t have to set up cameras or screen recording software, prepare office rooms, etc.: the process flows naturally, just like in real life.

So when should you be present during usability testing and keep an eye on everything?

- You need extremely detailed insights into user behavior and their thought processes. What do they look like when they tap the inactive Purchase button fifty times hoping it will work? That stubborn frustration is admirable.

- Your product has complex workflows, and you need to see exactly how users get through certain actions to make their lives easier. How do users even open that window? The whole team still doesn’t know.

- You need to build rapport with participants and encourage open feedback.

- The product is an early-stage prototype or you’re exploring new concepts that need feedback right now.

Humor aside, moderation can truly highlight some issues that remain unnoticed under other conditions. For example, a person may visibly hesitate before clicking “Proceed” on an e-commerce site, and you need to know why to increase sales.

Unmoderated

As the name suggests, users here complete tasks on their own, at home or work, without you looking over their shoulder. While participants interact with your product, automated recording tools capture their actions and feedback. Then, you analyze the recordings.

Observation

Through indirect means such as heatmaps, click tracking, and funnel analysis, you learn how users behave without asking them directly. It’s more than skimming recordings or heatmaps: you see which parts of your product get the most attention and where users drop off. For example, people may struggle to find a button because they expect it to be in the header. Based on this data, you can optimize the layout and place crucial elements in high-engagement areas. Friction goes down, and sales go up.
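Under the hood, a click heatmap is mostly aggregation: raw click coordinates are counted per region of the page. Tools like Hotjar do this for you. The Python sketch below only illustrates the idea, using a coarse grid and a hypothetical click log with coordinates relative to the page’s top-left corner.

```python
from collections import Counter


def click_heatmap(clicks, page_width, page_height, cols=4, rows=4):
    """Count clicks per cell of a cols x rows grid laid over the page.

    clicks: iterable of (x, y) pixel coordinates relative to the page's top-left corner.
    Returns a rows x cols list of counts; row 0 is the top of the page.
    """
    counts = Counter()
    for x, y in clicks:
        if not (0 <= x < page_width and 0 <= y < page_height):
            continue  # ignore clicks outside the page area
        col = int(x * cols / page_width)
        row = int(y * rows / page_height)
        counts[(row, col)] += 1
    return [[counts[(r, c)] for c in range(cols)] for r in range(rows)]


# Hypothetical click log on a 1200 x 800 page: most clicks land in the top-right corner.
clicks = [(100, 30), (1100, 40), (1150, 20), (600, 500), (1120, 60)]
for row in click_heatmap(clicks, 1200, 800):
    print(row)
```

A cluster of clicks in a header cell where no button exists is exactly the kind of signal that users expect a control there.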

Eye-tracking

You use special software with cameras or sensors to track the user’s eye movements. The recordings are turned into visual attention patterns, such as heatmaps or gaze plots, that show areas of focus and neglect. They help you understand how users scan pages for information, so you can rearrange content to highlight key elements (CTAs, headlines, etc.). Two well-known scanning patterns are the F-pattern, typical of text-heavy pages, and the Z-pattern, common on simpler layouts with less text.

Surveys and feedback polls

It’s one of the simplest and quickest ways to collect qualitative and quantitative data directly from participants. You create a poll with open-ended questions like “What did you find confusing?” or closed questions such as rating scales from 1 to 10. Participants then answer based on their experience.

With this type of usability testing, you gather subjective opinions, assess task success rates, and validate improvements after making changes.

Automated task-based testing

You send users predefined tasks to complete on their own, while tools record their performance: task completion rates, time-on-task, and error rates. For example, you can ask them to find a product, add it to the cart, and check whether they notice any discount badges, or what they think the discount applies to.

This kind of research shows how easily users complete specific actions like the ones above. You can also run A/B tests to see which design version performs better, and you will uncover common pain points along the way.
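When an A/B test compares two success or conversion rates, a two-proportion z-test is a common way to check whether the gap is larger than chance would explain. The sketch below uses only Python’s standard library and the normal approximation. That approximation is reasonable only when each group has a fair number of both successes and failures. The counts are hypothetical.

```python
import math


def two_proportion_z_test(success_a, n_a, success_b, n_b):
    """Compare success rates of design A and design B.

    Returns (z, two-sided p-value) using the pooled normal approximation.
    """
    p_a, p_b = success_a / n_a, success_b / n_b
    pooled = (success_a + success_b) / (n_a + n_b)
    if pooled in (0, 1):
        raise ValueError("need at least one success and one failure overall")
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(z) = (1 + erf(z / sqrt(2))) / 2
    p_value = 2 * (1 - 0.5 * (1 + math.erf(abs(z) / math.sqrt(2))))
    return z, p_value


# Hypothetical unmoderated test: 40 of 100 users finished checkout with design A,
# 55 of 100 with design B.
z, p = two_proportion_z_test(40, 100, 55, 100)
print(f"z = {z:.2f}, p = {p:.3f}")
```

Here z is about 2.12 and p about 0.034, so design B’s advantage is unlikely to be pure noise at the usual 5% threshold. With only a handful of participants per variant, treat such numbers as a hint rather than proof.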

When does unmoderated testing outshine other ways?

- You need quick feedback right here, right now. The timeline is tight, and you can’t wait for insights to ripen.

- You need a large sample and want to scale easily to thousands of participants.

- The target audience spreads across different locations, and you can’t invite them all to the office.

- You test simple tasks that don’t require your extensive guidance, and people can complete them on their own.

- You have to get quantitative data such as task success rates, time-on-task, and error rates.

- You want to observe real-world behavior in natural conditions.

ZAD app by Shakuro

By Location

Remote

You conduct testing online, and participants join from anywhere on their own devices. This gives you a wider pool of participants and saves money and time, since nobody has to travel. At the same time, users try out the product in a comfortable environment.

Still, since it’s remote, you’ll see little of participants’ body language or non-verbal cues through a camera, and nothing at all if there is no camera, only tracking software. What really frustrated me during remote testing were poor internet connections and slow user devices. They can seriously affect the results, yet you can’t predict them or do much about them.

In-Person

In-person testing covers lab and other offline sessions where people come to a physical location. You can keep an eye on participants, including their facial expressions and body language. This gives you more control and lets you eliminate distractions. If you run into technical issues, you can troubleshoot them on the spot, unlike in remote sessions.

Here is where in-person testing falls short: it’s limited to your local area. You can’t be in several places at once, unless you’re the next David Copperfield, of course. It’s also more expensive because of logistics.

By Data Type

Qualitative Insights (User Feedback)

With these usability testing methods, your primary focus is understanding why users behave the way they do. Rich, descriptive information about their experiences uncovers hidden issues that quantitative metrics alone miss. For instance, a participant says, “I can’t find the search bar because it blends into the background.” This reveals a design flaw you couldn’t see from your own perspective. When a user’s behavior seems illogical, post-test interviews reveal a lot about their reasoning. This information helps you identify usability problems and generate more ideas for improvement.

Quantitative Metrics (Success Rates, Time-on-Task)

You measure numbers that show how well users handle specific tasks.

Let’s look at the common quantitative metrics to understand the subject at hand:

- Task success rate: It’s the percentage of users who complete a task successfully.

- Time-on-task: Average time it takes people to complete a task.

- Error rate: Reflects how many mistakes users make while performing tasks.

- System Usability Scale (SUS): A standardized 10-item questionnaire that produces a 0–100 score for overall usability.

With these metrics, you objectively evaluate usability and track improvements over time. When you have different designs or product versions, it’s a great way to compare them. For instance, you discover that 80% of users successfully add an item to their cart within 30 seconds, but only 50% complete the checkout process. The metrics pinpoint an issue in the checkout workflow.
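The metrics above are straightforward to compute from session logs. In the Python sketch below, the `TaskResult` record layout and the sample data are hypothetical. Averaging time-on-task over successful attempts only is one common convention, not the only one. The SUS scoring follows the standard rule: odd-numbered items contribute (answer − 1), even-numbered items contribute (5 − answer), and the sum is multiplied by 2.5.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class TaskResult:
    participant: str
    completed: bool
    seconds: float
    errors: int


def task_success_rate(results):
    """Share of attempts that ended in success."""
    return sum(r.completed for r in results) / len(results)


def mean_time_on_task(results):
    """Average time of successful attempts (a common convention; failures excluded)."""
    times = [r.seconds for r in results if r.completed]
    return mean(times) if times else float("nan")


def errors_per_attempt(results):
    """Average number of errors per attempt."""
    return sum(r.errors for r in results) / len(results)


def sus_score(answers):
    """Standard SUS scoring: 10 answers on a 1-5 scale -> score from 0 to 100."""
    if len(answers) != 10 or not all(1 <= a <= 5 for a in answers):
        raise ValueError("SUS needs exactly 10 answers between 1 and 5")
    # Index 0 is item 1: odd items are positively worded (answer - 1),
    # even items are negatively worded (5 - answer).
    total = sum(a - 1 if i % 2 == 0 else 5 - a for i, a in enumerate(answers))
    return total * 2.5


# Hypothetical checkout results from four participants.
checkout = [
    TaskResult("P01", True, 95, 0), TaskResult("P02", False, 180, 3),
    TaskResult("P03", True, 110, 1), TaskResult("P04", False, 200, 2),
]
print(task_success_rate(checkout))   # 0.5
print(mean_time_on_task(checkout))   # 102.5
print(errors_per_attempt(checkout))  # 1.5
print(sus_score([4, 2, 4, 2, 5, 1, 4, 2, 4, 2]))  # 80.0
```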

Specialized Approaches

These methods focus on specific aspects such as information architecture or navigation structure. I’ll dwell on two common ones: card sorting and tree testing.

Card sorting

You give users a set of cards, each labeled with a piece of content or functionality. They need to group the cards into categories that make sense to them. After the session, you check the ways people organize information to understand what product structure is preferable. The approach is quite handy when you need to design an intuitive navigation system familiar to users.

Card sorting comes in two types: open and closed. In open card sorting, participants create their own categories. In closed card sorting, the categories are predefined.

Card sorting works best in the early design stages, when you have few ideas about the information architecture. It’s also useful for double-checking an existing structure before a redesign.
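A common way to analyze an open card sort is a co-occurrence (similarity) matrix: for each pair of cards, the share of participants who put them in the same group. Pairs with high scores are strong candidates to sit together in your navigation. The Python sketch below assumes each participant’s result is a dictionary mapping group name to cards, with each card used once per participant. The data is hypothetical.

```python
from collections import Counter
from itertools import combinations


def co_occurrence(sorts):
    """Share of participants who put each pair of cards in the same group.

    sorts: one dict per participant, mapping their group name -> list of cards.
    Group names may differ between participants (open sort); only membership matters.
    """
    pair_counts = Counter()
    for groups in sorts:
        for cards in groups.values():
            for a, b in combinations(sorted(cards), 2):
                pair_counts[(a, b)] += 1
    return {pair: count / len(sorts) for pair, count in pair_counts.items()}


# Hypothetical open card sort with three participants.
sorts = [
    {"Money": ["Payments", "Invoices"], "Me": ["Profile", "Settings"]},
    {"Billing": ["Payments", "Invoices", "Settings"], "Account": ["Profile"]},
    {"Finance": ["Invoices", "Payments"], "Account": ["Profile", "Settings"]},
]
for pair, share in sorted(co_occurrence(sorts).items(), key=lambda kv: -kv[1]):
    print(pair, f"{share:.0%}")
```

Here every participant grouped Invoices with Payments (100%), and two of three grouped Profile with Settings (67%). That suggests a billing section and an account section.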

Tree testing

Instead of cards, you present users with a “tree”: a text-only representation of your product’s structure. They need to locate specific items in the tree. Based on their results, you see how easily participants find things and whether the structure needs rework.

It’s wise to run tree testing to check navigation clarity and logic before designing wireframes. Tree tests also help you compare different information architectures and choose the best option.
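Tree-test results are usually summarized per task as a success rate (did the participant end on the right item?) and a directness measure (did they get there without backtracking?). The Python sketch below is a simplified, hypothetical version. It represents the tree as nested dictionaries, assumes every label is unique, and counts an attempt as direct only if it matches the ideal path exactly.

```python
def path_to(tree, target, prefix=()):
    """Return the list of labels from the root to `target`, or None if absent."""
    for label, children in tree.items():
        here = prefix + (label,)
        if label == target:
            return list(here)
        found = path_to(children, target, here)
        if found:
            return found
    return None


def score_tree_task(tree, target, attempts):
    """Success = ended on target; direct success = followed the ideal path with no detours."""
    ideal = path_to(tree, target)
    success = [a for a in attempts if a and a[-1] == target]
    direct = [a for a in success if a == ideal]
    n = len(attempts)
    return {"success_rate": len(success) / n, "direct_rate": len(direct) / n}


# Hypothetical text-only tree for a banking app.
tree = {
    "Accounts": {"Statements": {}, "Card settings": {}},
    "Payments": {"Transfer money": {}, "Scheduled payments": {}},
    "Help": {"Contact us": {}},
}
task_target = "Card settings"
attempts = [
    ["Accounts", "Card settings"],              # direct success
    ["Payments", "Accounts", "Card settings"],  # success after backtracking
    ["Help", "Contact us"],                     # failure
]
print(score_tree_task(tree, task_target, attempts))
```

A high success rate paired with low directness means people eventually find the item but first look in the wrong branch. That is a hint to rename or move it.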

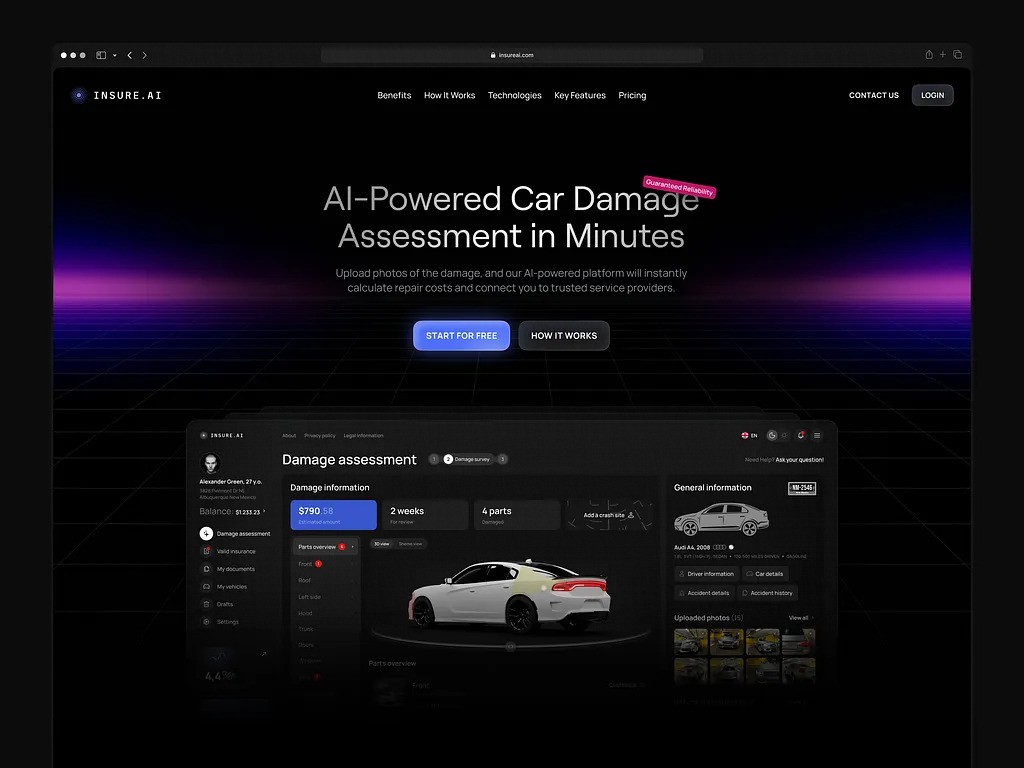

Automotive AI Insurance Website Design by Shakuro

Advanced and Hybrid Methods

Sometimes you have to dig deeper into complex user experiences. Advanced and hybrid usability testing methods come to the rescue, offering deeper insights into people’s behavior.

Longitudinal Studies

To get a grasp of how users’ preferences change with time, you observe them over a long period—weeks, months, or even years. Instead of a one-time usability test, you track the same group of people repeatedly while they keep interacting with your product.

Adaptability is our key strength as a species, and longitudinal studies show how it plays out with your product. They reveal new pain points and opportunities for improvement, showing why users continue (or stop) using something. You can also see how well users adopt new features after updates or redesigns, or whether those features need more tweaking.

Here’s an example: you are working on a fitness app. We all know that people have a hard time building habits. They can be lazy, forget, or simply change their minds. But our task is to keep them using the fitness app as long as possible. So we need to see when and why participants give up on exercising. That’s why we conduct a longitudinal study.
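In a longitudinal study like this, a simple retention curve shows when participants drop off. You then know which weeks to probe in follow-up interviews. The Python sketch below assumes you log which participant IDs were active in each week. The six-person cohort is hypothetical.

```python
def weekly_retention(cohort, activity, weeks):
    """Share of the original cohort still active in each week.

    cohort: set of participant IDs recruited at the start of the study.
    activity: dict mapping week number -> set of participant IDs active that week.
    """
    size = len(cohort)
    return [len(cohort & activity.get(w, set())) / size for w in range(1, weeks + 1)]


# Hypothetical fitness-app study with six participants over four weeks.
cohort = {"P01", "P02", "P03", "P04", "P05", "P06"}
activity = {
    1: {"P01", "P02", "P03", "P04", "P05", "P06"},
    2: {"P01", "P02", "P03", "P05"},
    3: {"P01", "P03"},
    4: {"P01", "P03"},
}
curve = weekly_retention(cohort, activity, weeks=4)
print([f"{r:.0%}" for r in curve])
```

In this made-up data, the sharpest drop happens between weeks 2 and 3. Those are the participants to ask why they stopped.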

Competitive Benchmarking

When you develop a product, researching your rivals is a must. Quite often, there is no need to reinvent the wheel—just adopt the features that work well. The same goes for UX.

When you compare your product’s usability against competitors, you may face a harsh truth: your app or website has weaknesses, and competitors do some things better. But! At the same time, you pinpoint unique advantages that set your product apart.

The workflow is simple: conduct usability tests on both your product and competitors. The focus should be on metrics like task success rates, time-on-task, and user satisfaction.

Omnichannel Testing

If your product spans several channels, this type of testing is a must: you check how people experience it across a website, mobile app, physical store, and so on. Rather than looking at a single channel such as the website, you examine the entire user journey.

It won’t surprise you that users often expect the product to work and feel the same on every platform. Same performance, same categories, and same menus, even on a screen half the size.

In omnichannel usability testing, you can notice gaps between channels that frustrate users. When polishing all these touchpoints, you improve overall user satisfaction.

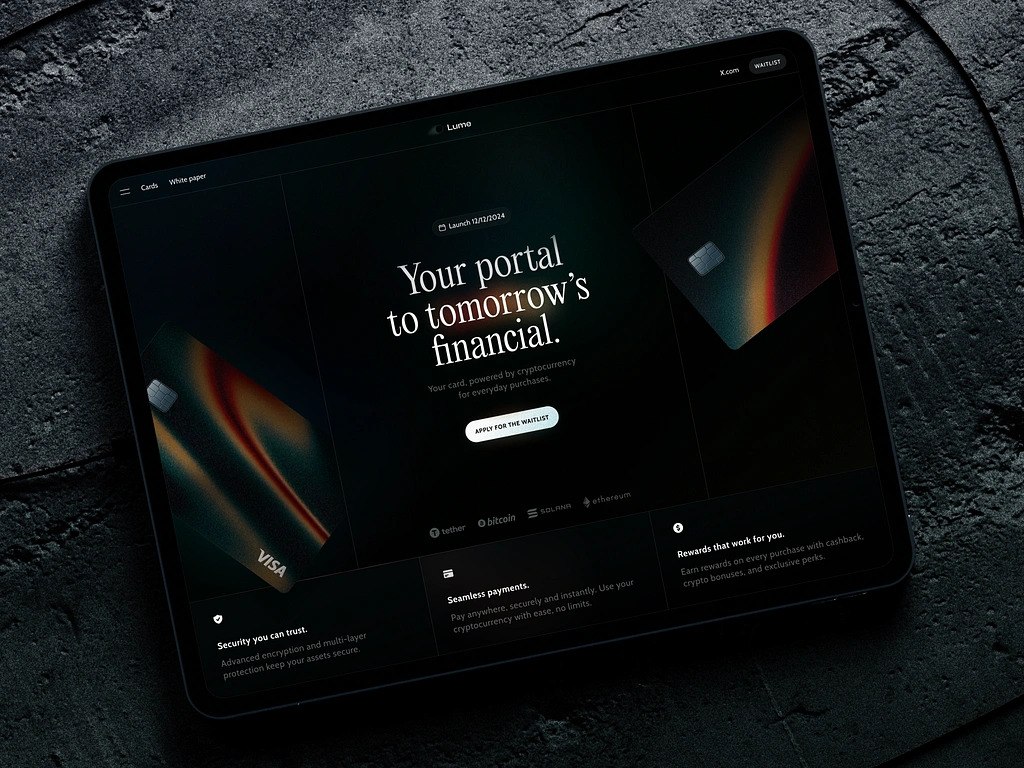

Crypto Related Marketing Website by Conceptzilla

Step-by-Step Execution Guide

Well, now that you’re armed and ready, how about some action? To put the theory into practice, here is a clear plan. You can use it as a blueprint for the sessions and start gathering insights.

Step 1: Set the Goals

It all begins with fundamental questions that lay the foundation for your research. Which parts of the product need testing? Who is the target audience? What are the key issues? Consider the last question from the user’s perspective too, because users are the heroes who face these problems every day. Later, you can turn the answers into a “skeleton” for your script.

Then choose the type of usability testing: in-person or remote, moderated or unmoderated. This choice directly influences the flow of the sessions and the results.

Step 2: Recruit Participants

Based on the answers from step one, choose participants representing your target audience. Decide on relevant characteristics like demographics, skill level, device type, hobbies, etc.

Aim for 5–10 participants for most tests, as this typically covers the majority of issues. It also saves time, because testing, especially in person, takes a lot of time and energy. More participants will drain your battery and bring diminishing returns, since later sessions mostly repeat issues you’ve already seen.
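Screening candidates against your criteria while keeping a mix of skill levels can be done with a short script. In the Python sketch below, the candidate fields (`age`, `country`, `skill`) and the audience rule in `fits` are hypothetical examples. Replace them with the criteria from your personas.

```python
from collections import defaultdict


def select_participants(candidates, matches_audience, per_level=3):
    """Keep candidates who fit the target audience, capping how many come from each skill level.

    candidates: list of dicts with at least "id" and "skill" keys (hypothetical schema).
    matches_audience: function returning True if a candidate fits your personas.
    per_level: maximum number of participants taken from each skill level.
    """
    picked = []
    taken = defaultdict(int)
    for c in candidates:
        if matches_audience(c) and taken[c["skill"]] < per_level:
            picked.append(c)
            taken[c["skill"]] += 1
    return picked


def fits(candidate):
    # Hypothetical audience: adults living in Germany.
    return candidate["age"] >= 18 and candidate["country"] == "DE"


candidates = [
    {"id": "C1", "age": 34, "country": "DE", "skill": "novice"},
    {"id": "C2", "age": 17, "country": "DE", "skill": "expert"},
    {"id": "C3", "age": 52, "country": "DE", "skill": "expert"},
    {"id": "C4", "age": 41, "country": "FR", "skill": "novice"},
    {"id": "C5", "age": 29, "country": "DE", "skill": "novice"},
]
print([c["id"] for c in select_participants(candidates, fits, per_level=1)])
```

Capping each skill level keeps novices from crowding out experts (or vice versa) when one group responds to your recruiting call much faster.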

Step 3: Design Tasks

Depending on the method, write realistic tasks that simulate real-world scenarios. Before you start, always create a written script with all the details you might need: tasks, steps, questions, names, etc. Otherwise, it’s very easy to get confused and compromise the test itself.

When writing tasks, pick the ones that align with both user needs and business objectives. Stick to simple language without any jargon, which again helps avoid confusion. It’s a good idea to try out the tasks yourself, especially for competitive benchmarking, where you have to know the rival’s product like the back of your hand. And, of course, the tasks should be achievable for participants with the skill levels you selected.

Step 4: Set Up the Environment

Prepare the place for the test. Depending on the format, the conditions and sub-steps will be different.

For instance, for an in-person test, you need a device, a quiet room with recording equipment, and recording software. For a remote test, you also need to send participants instructions for installing the required tools, which should be free for them to use. Have a plan B with backup software ready in case something goes wrong. I had to switch to mine more often than I’d like to admit.

Keep in touch with the participants in case things go south and they need to reschedule the session.

Step 5: Conduct the Test

Fully explain the purpose of the test and answer all of the participant's questions. Only then can you start testing, giving one task at a time. Avoid giving hints unless the person asks you for help. And by all means, don't take the lead. It's the participant who takes the test, not you.

Encourage them to think aloud ("I'm looking for the search bar…"). While the user works through the tasks, record your observations: successes, failures, hesitations, verbal feedback, and non-verbal cues (if in person).

Step 6: Collect Feedback and Analyze Results

When the participant finishes the test, ask them to rate their experience on a scale from 1 to 5. Then request feedback about what worked well and what didn’t.

Now comes the grinding phase: a comprehensive analysis in search of patterns and actionable insights. Recurring issues and areas of confusion are exactly what you're looking for. Alongside the flaws, highlight strengths and opportunities for improvement.
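If your notes are tagged consistently, the first pass of this analysis can be a simple tally. The Python sketch below assumes a hypothetical `Session` record holding each participant's 1-to-5 rating and the issue tags you noted; it averages the ratings and surfaces issues that more than one participant ran into. The names and the "seen by at least two people" threshold are illustrative assumptions, not a standard.

```python
from __future__ import annotations

from collections import Counter
from dataclasses import dataclass, field
from statistics import mean


@dataclass
class Session:
    """Hypothetical record of one test session (field names are illustrative)."""
    participant: str
    rating: int                                       # post-test rating, 1 to 5
    issues: list[str] = field(default_factory=list)   # issue tags from your notes


def average_rating(sessions: list[Session]) -> float:
    """Mean post-test rating; rejects values outside the 1-5 scale."""
    for s in sessions:
        if not 1 <= s.rating <= 5:
            raise ValueError(f"{s.participant}: rating {s.rating} is outside 1-5")
    return float(mean(s.rating for s in sessions))


def recurring_issues(sessions: list[Session], min_participants: int = 2) -> list[tuple[str, int]]:
    """Issues reported by at least `min_participants` people, most frequent first."""
    counts: Counter[str] = Counter()
    for s in sessions:
        counts.update(set(s.issues))  # count each issue once per participant
    recurring = [(issue, n) for issue, n in counts.items() if n >= min_participants]
    # Sort by frequency (descending), then alphabetically for a stable order.
    return sorted(recurring, key=lambda pair: (-pair[1], pair[0]))
```

A tally like this only tells you where to look; the recordings and think-aloud notes still explain why each issue happens.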

Tools and Technologies

| Tool | Type | Best for | Key features |
|---|---|---|---|
| Zoom + Lookback | Moderated | In-depth remote interviews | Screen sharing, recording |
| UserTesting | Unmoderated | Quick qualitative feedback | Task-based testing, video and audio recording |
| Maze | Unmoderated | Prototypes and A/B testing | Interactive prototypes, surveys |
| Hotjar | Analytics | Behavior tracking | Heatmaps, session recordings |
| Optimal Workshop | Specialized | Card sorting and tree testing | Information architecture evaluation |
| Google Analytics | Analytics | Overall site performance | Traffic, demographics, conversion |

Moderated Testing Tools

As you've probably guessed from the name, this software is meant for moderated testing, where a facilitator needs tools for observation. These tools help manage sessions and record interactions, with features like screen sharing and recording, real-time collaboration, note-taking, and feedback collection.

Below is the software I've personally used for moderated usability tests:

- Zoom: You can set up video calls with screen recording and sharing. Works in most custom setups where you need full control over the session. Easy to install and use, people rarely need additional instructions for it.

- Lookback: Optimized for web and mobile app testing. You can capture screen activity and how participants react to the app. There are cool features for team discussions.

- GoToMeeting: Quick sessions with screen sharing and recording. What I personally like are the automatic transcriptions and audio/video encryption.

- Miro: A collaborative whiteboarding tool that I often used for remote card sorting, brainstorming, or wireframing during moderated sessions.

Unmoderated Platforms

These tools serve the opposite purpose: participants complete tasks on their own, following predefined scripts, with no facilitator present. You can also use this software for participant management, automated data collection, and reporting.

Here is what I used for unmoderated usability testing:

- UserTesting: People perform tasks on your product and talk about their impressions, while the software records everything for later review.

- Maze: Interactive prototypes and surveys assist you in collecting both quantitative and qualitative data. It’s a great tool for early-stage designs or A/B testing.

- Trymata: Video/audio recordings for remote testing with minimal setup. My favorite features are team collaboration and video transcriptions.

Analytics Suites

If you are familiar with SEO strategies, you probably know most of these tools for collecting and analyzing quantitative data. They apply to usability testing as well, giving you long-term tracking and performance metrics. Features like heatmaps, click tracking, session recordings, funnel analysis, and conversion rate optimization will definitely complement your testing sessions.

What can I recommend from popular analytics suites? Here are some names:

- Google Analytics: A timeless classic that tracks website traffic, user demographics, and behavior metrics like bounce rates. It helps you understand overall site performance and identify high-traffic areas.

- Hotjar: All-in-one tool that combines heatmaps, session recordings, and feedback tools for comprehensive analytics. You can get both qualitative and quantitative insights.

- Crazy Egg: Generates heatmaps and scroll maps to visualize where users click and scroll. Take advantage of it to optimize layouts and call-to-action placements.

- FullStory: A comprehensive tool for behavioral data: it records entire user sessions, so you can replay interactions and identify friction points.

Fintech Mobile App UI Design by Shakuro

Best Practices and Pitfalls

The effectiveness of any tool depends on how well you wield it, and usability testing is no exception. I've already sprinkled some tips throughout the text, but I'll gather them all below:

- Define clear objectives: Without a crystal-clear idea of what you want to achieve with the test, it's pointless. It will just waste your time and the participants' time.

- Recruit the right participants: The users should match your target audience by demographics, skill level, behavior, and other relevant characteristics. There's no point in inviting random people just for the sake of testing.

- Write realistic tasks: Tasks should be easy to grasp and look like real-world scenarios. Participants should be able to solve them regardless of their skill level.

- Support think-aloud: Ask participants to talk about their thoughts as they interact with the product. You will better understand their decision-making process and find hidden pain points.

- Stay neutral: I know it's tempting to help people solve tasks, but hold back unless they ask you to. Avoid influencing participants, even with body language like nodding. Let them figure things out on their own unless they get so stuck that it derails the test.

- Use a mix of qualitative and quantitative data: Combine observations with metrics like task success rates and time-on-task to get a full picture.
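The two metrics named above are easy to compute from a session log. The Python sketch below assumes a hypothetical `Attempt` record per participant per task, reports the success rate per task, and uses the median time of successful attempts as time-on-task. Using the median is one simple choice that resists a single very slow session; the record layout and function name are assumptions for illustration.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from statistics import median
from typing import Optional


@dataclass
class Attempt:
    """Hypothetical record of one participant attempting one task."""
    task: str
    completed: bool
    seconds: float  # time from task start until success or giving up


def task_metrics(attempts: list[Attempt]) -> dict[str, dict[str, Optional[float]]]:
    """Per-task success rate and median time-on-task for successful attempts."""
    by_task: dict[str, list[Attempt]] = defaultdict(list)
    for a in attempts:
        by_task[a.task].append(a)

    report: dict[str, dict[str, Optional[float]]] = {}
    for task, rows in by_task.items():
        success_times = [a.seconds for a in rows if a.completed]
        report[task] = {
            "success_rate": len(success_times) / len(rows),
            # No successful attempts means there is no meaningful time-on-task.
            "median_seconds": median(success_times) if success_times else None,
        }
    return report
```

Numbers like these show where people struggle; pairing them with the think-aloud notes for the same tasks shows why.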

- Test early and often: My favorite analogy is furniture. How do you add another shelf once the closet is already assembled? It's the same here: conduct usability tests throughout the whole process, not just at the end. This way, you will "add the shelf" on time.

- Document everything: Take detailed notes during sessions and record videos. Analyze them thoroughly later for findings and share insights with stakeholders.

Optimization Strategies

Beyond these best practices, there are optimization strategies you can add to your arsenal to get more accurate results.

Prioritize critical paths

Often I feel like a captain on a sinking ship: there is an urge to plug every leak at once. However, that's not your job here. Focus only on high-priority tasks that align with business goals or user needs.

Use prototypes early

To save budget, use low-fidelity prototypes. With tools like Figma or Sketch, you can draft interactive mockups quickly and get feedback from participants without a big investment.

Incorporate remote testing

Although remote tests make it harder to pick up additional context, they still bring great value. You expand your reach, reduce costs, and still maintain a decent level of quality.

Research analytics

Track real-world usage patterns with Google Analytics, Hotjar, or FullStory. This approach helps you find the points where users drop off or, on the contrary, sections with high traffic. It's a quick way to see which parts of the layout to optimize.

Merge multiple methods

Combine different usability tests, including specialized ones such as card sorting, to get all-encompassing results. Remote unmoderated testing is a great starting point because it's cost-effective, but follow it up with in-person moderated sessions.

Involve your team

Share your findings with designers, developers, and other stakeholders so that everyone understands the issues and agrees on solutions. Show them session highlights to illustrate the crucial points.

Common Mistakes to Avoid in User Testing

Even experienced testers fall into traps that reduce the effectiveness of their tests. I fell into a few myself as a junior UX designer. My signature mistake was helping participants even when they hadn't really asked me to. "They're such a nice person, I should show them the way!" Spoiler: I shouldn't have.

Well, what else?

- Ignoring participant diversity: You test only a narrow group of users. Yes, they belong to the target audience, but they cover too small a slice of it. Recruit participants from diverse backgrounds, ages, and experience levels.

- Overloading participants with tasks: Do not pack everything in one test. Too many assignments will overwhelm the users and reduce the quality of feedback. Limit each session to 3–5 tasks.

- Not recording sessions: Without recordings, it's harder to revisit, analyze, and share findings later. My supervisors quite often wanted to see some crucial clips.

- Skipping the post-test talk: You miss valuable context about why users behaved the way they did. People can be too shy to take the lead and share their opinion of your product, especially during in-person sessions. So ask them.

- Overlooking accessibility: Apart from demographics, disabilities and accessibility needs are a must-have criterion for participant selection. Test with a variety of users, including those who rely on assistive technologies.

- Rushing to submit results: Jumping to conclusions leaves you with unreliable results. Even if your data is correct, you need to devote time to analyzing it thoroughly. Get rid of wrong assumptions and discuss the information with your team.

AI Chef Mobile App by Shakuro

Frequently Asked Questions

What is usability testing?

It’s a way to check how accessible and easy to use your product is by assigning tasks to users, observing the process, and analyzing the results. In the end, you get a list of friction points that should be polished in order to improve UX.

What are the advantages of usability testing?

You find and fix pain points before development starts, which is less expensive. You also get feedback based on real-life interactions by your target audience, which is far more valuable than any artificial test. And you can test various concepts quickly to confirm or rule out assumptions.

How many types of usability testing are there?

There are three main divisions of usability testing. Moderated vs. unmoderated refers to whether someone observes the participant. In-person vs. remote refers to where the user takes the test: in your office or alone at home. And quantitative vs. qualitative refers to whether you measure specific metrics or observe how users go about the task and why.