The promise of leveraging deep learning sometimes feels like a double-edged sword. While the potential applications are vast—from enhancing customer experiences through personalized recommendations to optimizing supply chains with predictive analytics—the challenge lies in choosing the right tools to bring those ideas to life.

The overwhelming number of deep learning frameworks available today can make decision-making daunting. Are you grappling with performance issues, complex architectures, or the steep learning curves associated with many frameworks? Perhaps you’ve wrestled with integrating your models into production environments seamlessly. The right framework can accelerate your development process and directly impact your project’s success.

This article aims to guide you through the maze of deep learning frameworks, shining a light on the most popular options available. We’ll explore their key features, advantages, and specific use cases to help you make informed decisions that will propel your projects forward.

What are Deep Learning Frameworks?

Let’s start with the general definition.

Deep learning frameworks are software libraries that help you create and train deep learning models more easily. They include ready-made components, like layers and algorithms, that you can use to build complex neural networks without starting from scratch.

The frameworks play a vital role in simplifying the coding process for several reasons:

- Modular Components: they come with built-in modules for various neural network layers, optimizers, and activation functions. Using these pre-defined components, you can quickly assemble complex models without writing extensive amounts of code.

- Consistency and Best Practices: most frameworks adhere to established best practices in machine learning and deep learning, promoting consistency in code structure and methodologies. This is particularly beneficial for teams, ensuring that everyone follows the same rules and that the codebase remains maintainable.

- Automatic Differentiation: deep learning frameworks typically include automatic differentiation capabilities, which simplify the process of calculating gradients during the training phase. This feature allows you to focus more on model architecture rather than the intricate details of optimization.

- Data Handling: Many frameworks include powerful tools for data preprocessing, augmentation, and loading. This reduces the setup time for training neural networks and helps streamline the workflow.

- Community and Support: popular toolkits often come with extensive documentation and a vibrant community. This means you can easily find resources, tutorials, and support when encountering challenges, further speeding up the development process.
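
To make the automatic differentiation point concrete, here is a minimal sketch of reverse-mode differentiation in plain Python. It is not taken from any particular framework: the `Value` class and its methods are hypothetical names used only for illustration, and real frameworks operate on tensors with far more operations and optimizations.

```python
import math


class Value:
    """A scalar that records how it was computed so gradients can flow back."""

    def __init__(self, data, parents=(), backward_rule=None):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        # backward_rule maps the upstream gradient to one gradient per parent.
        self._backward_rule = backward_rule

    def __add__(self, other):
        return Value(self.data + other.data, (self, other), lambda g: (g, g))

    def __mul__(self, other):
        return Value(
            self.data * other.data,
            (self, other),
            lambda g: (g * other.data, g * self.data),
        )

    def tanh(self):
        t = math.tanh(self.data)
        return Value(t, (self,), lambda g: (g * (1.0 - t * t),))

    def backward(self):
        # Topologically order the graph, then apply the chain rule in reverse.
        order, seen = [], set()

        def visit(node):
            if id(node) not in seen:
                seen.add(id(node))
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward_rule is not None:
                grads = node._backward_rule(node.grad)
                for parent, g in zip(node._parents, grads):
                    parent.grad += g


# One "neuron": y = tanh(w * x + b)
w, x, b = Value(0.5), Value(2.0), Value(-1.0)
y = (w * x + b).tanh()
y.backward()  # fills w.grad, x.grad, and b.grad
```

After `backward()` runs, each input holds the derivative of `y` with respect to itself, which is what an optimizer needs to update the weights. Frameworks do this bookkeeping for you across millions of parameters.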

How to Choose the Right Deep Learning Framework

Choosing the right tool for your next project depends on several factors. Here are some key considerations to help you make an informed decision:

- Ease of Use: look for frameworks with intuitive APIs and good documentation. This is especially important if you are a beginner. Also, a strong community can provide support, tutorials, and pre-trained models.

- Flexibility vs. Performance: some frameworks like TensorFlow and PyTorch are very flexible, allowing you to customize models extensively. At the same time, check if the framework supports speed optimizations, especially for large datasets or complex models.

- Deployment and Production: consider how easy it is to deploy models using the framework. Some offer built-in tools for production (like TensorFlow Serving). Look for compatibility with cloud platforms or other deployment environments you plan to use.

- Supported Tools and Ecosystem: some popular deep learning frameworks have a rich ecosystem of tools for data preprocessing, model evaluation, etc.

- Type of Projects: identify the nature of your projects and base your decision on it. For instance, PyTorch is often favored for research and prototyping, while TensorFlow is more common in production settings.

- Integration with Other Libraries: check compatibility with other libraries and languages you might need, such as NumPy, Scikit-learn, or even specific hardware accelerators.

- Industry Adoption: look into which frameworks are commonly used in your field or by companies you admire. This can provide insights into the framework’s robustness and features.
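
One way to turn these considerations into a decision is a simple weighted scoring matrix. The sketch below is only an illustration: the weights, the ratings, and the names `framework_a` and `framework_b` are placeholders, not an assessment of any real framework. Your team would fill in its own values after evaluating the candidates.

```python
# Hypothetical weights: how much each criterion matters to this project (sum to 1).
weights = {
    "ease_of_use": 0.3,
    "deployment": 0.4,
    "ecosystem": 0.2,
    "industry_adoption": 0.1,
}

# Placeholder 1-5 ratings a team might assign after its own evaluation.
ratings = {
    "framework_a": {
        "ease_of_use": 5, "deployment": 3, "ecosystem": 4, "industry_adoption": 4,
    },
    "framework_b": {
        "ease_of_use": 3, "deployment": 5, "ecosystem": 4, "industry_adoption": 4,
    },
}


def weighted_score(framework_ratings, criterion_weights):
    """Return the weighted sum of ratings over all criteria."""
    return sum(criterion_weights[c] * framework_ratings[c] for c in criterion_weights)


scores = {name: weighted_score(r, weights) for name, r in ratings.items()}
best = max(scores, key=scores.get)
```

Because deployment carries the largest weight in this made-up example, the candidate that rates higher on deployment comes out ahead. Changing the weights to match your priorities can flip the result.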

Popular Deep Learning Frameworks

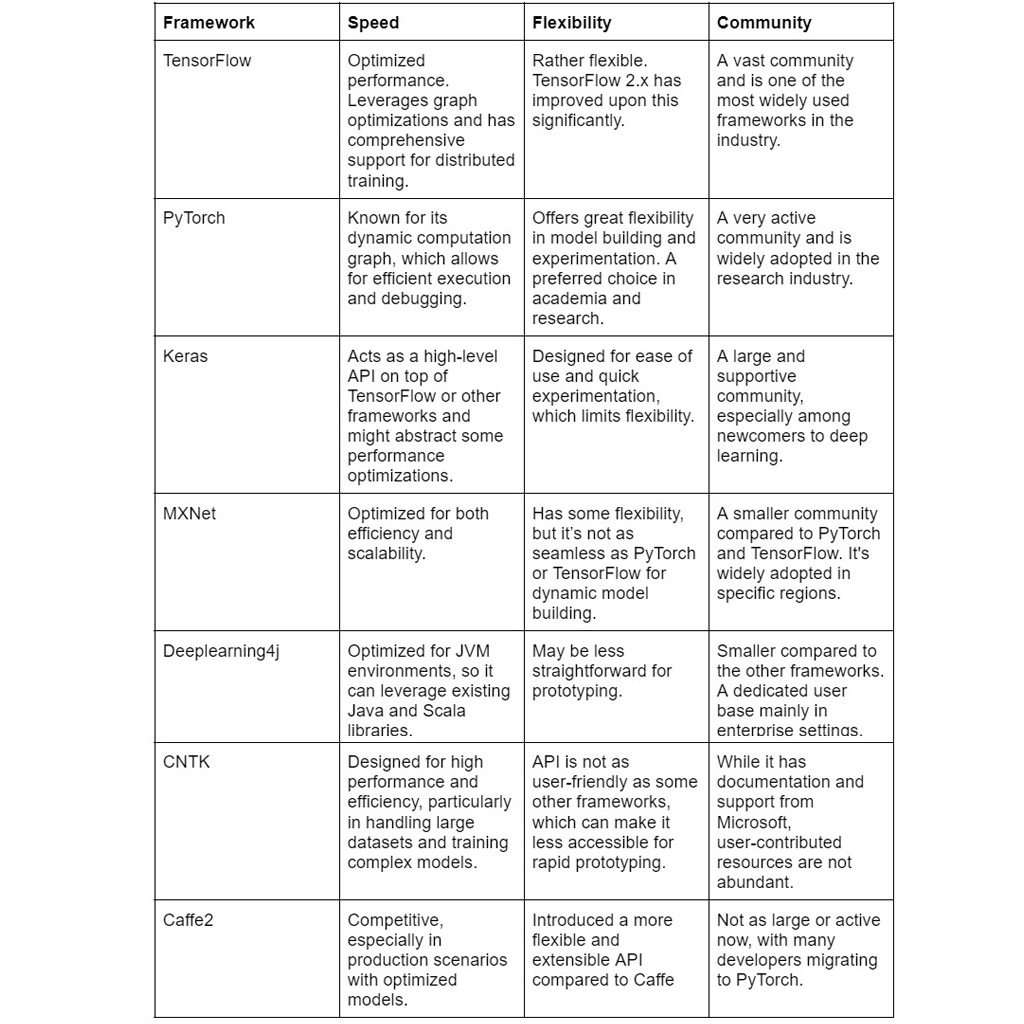

Let’s take a look at the top frameworks you can leverage. For your convenience, we packed the comparison into a table, so you can grasp the key features at a glance.

Deep Learning Framework Comparison

TensorFlow

TensorFlow is an open-source framework developed by Google. It is widely used for building and training machine learning models, particularly deep learning models.

Its extensive features and scalability make it suitable for both beginners and advanced users seeking to develop sophisticated models.

Advantages:

- High-Level and Low-Level APIs: the TensorFlow framework provides both high-level APIs for easy model building and low-level APIs for more control over model architecture.

- Flexibility: you can create complex architectures by defining custom layers, loss functions, and optimizers.

- Scalability: it is designed to run on multiple CPUs or GPUs and can be scaled up to deploy large models on cloud infrastructure.

- TensorFlow Serving: a system for serving machine learning models in production, which allows for high-performance inference.

- Ecosystem: TensorFlow includes a variety of tools and libraries such as TensorBoard for visualization, TensorFlow Lite for mobile and embedded devices, and TensorFlow Extended (TFX) for end-to-end ML pipelines.

- Community and Support: with a large community of developers and extensive documentation, TensorFlow has strong support and a wealth of resources available for users.

- Cross-Platform: the framework supports deployment on various platforms, including desktops, mobile devices, and cloud services.

Use Cases:

- Image Recognition: the TensorFlow framework provides a variety of tools, including the Keras API, which simplifies building and training convolutional neural networks (CNNs) — the backbone of modern image recognition tasks.

- Natural Language Processing: it is extensively used for various NLP tasks, including sentiment analysis, machine translation, named entity recognition, and more.

- Reinforcement Learning: you can integrate TensorFlow with various environments like OpenAI Gym, allowing you to simulate environments for training RL agents in tasks such as game playing, robotic control, or resource management.

- Time Series Analysis: time series data is often sequential, making RNNs a natural fit. TensorFlow provides tools for building RNNs and their variants (LSTMs and GRUs) to capture temporal dependencies.

Weaknesses:

- Steep Learning Curve: for beginners, understanding the intricacies of lower-level APIs can be challenging compared to other deep learning frameworks.

- Complexity: the extensive features and flexibility can lead to complexity in model building and debugging, especially for large projects.

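- Longer Experimentation Cycle: in graph-based workflows (the default in TensorFlow 1.x, and still available through tf.function in TensorFlow 2.x, which runs eagerly by default), defining computation graphs up front can slow down the rapid experimentation cycle often required in research settings.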

PyTorch

PyTorch is an open-source machine learning framework originally developed by Facebook’s AI Research lab (FAIR). It is widely used for applications such as deep learning and artificial intelligence research due to its flexibility and dynamic computation graph capabilities. Its primary interface is Python, built on top of a C++ core, so it’s easy to use for most developers.

Advantages:

- Dynamic Computation Graphs: the framework utilizes a dynamic computation graph (also known as “define-by-run”), allowing you to modify the graph on the fly during runtime. This makes it easier to debug and experiment with models, as changes can be made without recompiling the entire graph.

- Tensors: PyTorch provides a tensor library that offers similar functionality to NumPy but adds GPU acceleration. This means that computations on tensors can be performed on either the CPU or GPU, leading to significant performance improvements for large-scale machine-learning tasks.

- Easy to Use: PyTorch’s API is designed to be intuitive and user-friendly, which helps in the rapid prototyping of models. Its design is Pythonic, so if you know Python, it will be easy to understand and implement machine learning algorithms.

- Strong Community and Ecosystem: it has a large and active community, along with extensive documentation and resources. It has various extensions, libraries, and tools built around it, such as torchvision for computer vision tasks and torchaudio for audio processing.

- Interoperability: you can integrate it with other libraries and frameworks, including NumPy, SciPy, and many others, making it a versatile option for building machine learning models.

- Visualization Tools: PyTorch supports visualization through libraries like Matplotlib and through TensorBoard (via the built-in torch.utils.tensorboard module or the third-party tensorboardX package), allowing users to visualize training progress, model architecture, and other metrics.

- Support for Distributed Training: the deep learning framework has built-in support for data parallelism and distributed training, facilitating scaling across multiple GPUs and machines.

- Research-Oriented: given its flexibility and ease of experimentation, PyTorch is particularly popular among researchers in the field of machine learning and deep learning, making it the go-to framework for many academic projects and papers.
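
The difference between a dynamic ("define-by-run") graph and a static ("define-then-run") graph can be sketched in plain Python. This is a conceptual illustration only, not PyTorch or TensorFlow code, and the function names are hypothetical.

```python
def build_static_graph():
    """Define-then-run: describe the computation first, as data."""
    # Each step is (operation name, constant operand); no input has been seen yet.
    return [("mul", 2.0), ("add", 1.0)]


def run_static_graph(graph, x):
    """Execute a previously defined graph on a concrete input."""
    ops = {"mul": lambda a, b: a * b, "add": lambda a, b: a + b}
    for name, operand in graph:
        x = ops[name](x, operand)
    return x


def run_dynamic(x):
    """Define-by-run: ordinary Python control flow decides the computation."""
    steps = 0
    # The number of operations depends on the input value itself.
    while abs(x) < 10.0:
        x = x * 2.0 + 1.0
        steps += 1
    return x, steps


graph = build_static_graph()
static_result = run_static_graph(graph, 3.0)  # same fixed graph for every input
dynamic_result, steps = run_dynamic(3.0)      # graph shape depends on the input
```

In the dynamic version you can put a breakpoint or a `print` inside the loop and inspect intermediate values with normal Python tools, which is the debugging advantage described above. The static version trades that convenience for a fixed structure that is easier to optimize and export ahead of time.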

Use Cases:

- Computer Vision: PyTorch is widely used for image classification, object detection, and generative modeling.

- Natural Language Processing: Its dynamic nature is well-suited for tasks like language modeling, machine translation, and sentiment analysis.

- Reinforcement Learning: PyTorch’s flexibility allows researchers to experiment with complex environments and agent behaviors.

- Generative Models: many generative adversarial networks (GANs) and variational autoencoders (VAEs) are implemented using PyTorch due to its ease of use.

Weaknesses:

- Deployment Challenges: it has not been as robust as TensorFlow in terms of deploying models to production environments, particularly in large-scale applications. The model serving options have been less mature compared to TensorFlow Serving, making the task more complex.

- Speed and Performance: when working on certain tasks and models, you may benefit more from TensorFlow’s optimizations. Also, there can be performance issues while handling very large batch sizes or specific workloads.

- Ecosystem Maturity: it’s less mature in comparison to TensorFlow. There are still cases where you might find it harder to access pre-trained implementations of popular architectures, and the range of available libraries and tools may sometimes not be extensive.

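- Learning Curve for Beginners: although the dynamic computation graph generally makes debugging easier, beginners may still find lower-level tasks, such as writing training loops by hand, harder than in higher-level APIs.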

- Memory Consumption: PyTorch can consume more GPU memory than TensorFlow for similar tasks, especially when not optimized carefully.

Keras

Keras is an open-source framework that provides a high-level interface for building and training deep learning models. It is designed to simplify the development of neural networks and is user-friendly, making it accessible for both beginners and experts.

Advantages:

- User-Friendly API: with a simple and intuitive API, you can quickly create and experiment with deep learning models.

- Modularity: you can easily combine and configure various components like layers, optimizers, and loss functions.

- Support for Different Backends: the Keras framework runs on top of other deep learning frameworks. Earlier versions supported TensorFlow, Theano, and Microsoft Cognitive Toolkit (CNTK); Keras 3 supports TensorFlow, JAX, and PyTorch.

- Extensive Pre-trained Models: it provides access to several pre-trained models, which can be fine-tuned for specific tasks.

- Flexibility: while Keras offers simplicity, the framework also allows for detailed customization for users who require more control.
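
The modularity described above boils down to stacking interchangeable building blocks. The following plain-Python sketch mimics that idea with hypothetical `Dense`, `ReLU`, and `Sequential` classes. It is not the Keras API and it has no training logic. It only shows how layers compose.

```python
class Dense:
    """Fully connected layer: output[j] = sum_i inputs[i] * weights[i][j] + bias[j]."""

    def __init__(self, weights, bias):
        self.weights = weights
        self.bias = bias

    def __call__(self, inputs):
        return [
            sum(x * row[j] for x, row in zip(inputs, self.weights)) + self.bias[j]
            for j in range(len(self.bias))
        ]


class ReLU:
    """Element-wise activation: max(0, x)."""

    def __call__(self, inputs):
        return [max(0.0, x) for x in inputs]


class Sequential:
    """Chains layers so each layer's output feeds the next."""

    def __init__(self, layers):
        self.layers = layers

    def __call__(self, inputs):
        for layer in self.layers:
            inputs = layer(inputs)
        return inputs


# Hand-picked weights, chosen only to keep the arithmetic easy to follow.
model = Sequential([
    Dense(weights=[[1.0, -1.0], [0.5, 2.0]], bias=[0.0, -3.0]),
    ReLU(),
    Dense(weights=[[2.0], [1.0]], bias=[0.5]),
])
output = model([2.0, 1.0])
```

Swapping `ReLU` for another activation or inserting an extra `Dense` layer changes one line of the model definition, which is the kind of quick experimentation a high-level API is meant to enable.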

Use cases:

- Image Classification: it is frequently used for building convolutional neural networks (CNNs) to classify images from datasets like CIFAR-10, MNIST, and ImageNet.

- Object Detection: Models like SSD (Single Shot Detector) and YOLO (You Only Look Once), which can identify and locate multiple objects in images, can be implemented using Keras.

- Natural Language Processing: the Keras framework supports Recurrent Neural Networks (RNNs) and Long Short-Term Memory (LSTM) networks for tasks such as sentiment analysis, text generation, and language translation.

- Generative Models: It allows for the creation of generative models like Generative Adversarial Networks (GANs) for tasks such as image generation, data augmentation, and style transfer.

- Time Series Forecasting: Keras can be used to build models for forecasting future values in time series data, applicable in finance, weather prediction, and resource allocation.

Weaknesses:

- Performance Limitations: Keras runs on top of a backend framework such as TensorFlow. While it provides an easier interface for building models, it may not be as optimized for performance as using the underlying framework directly.

- Flexibility and Customization: this deep learning framework is designed for ease of use, which can sometimes limit the flexibility needed for highly customized models or complex architectures.

- Debugging: it can be more challenging compared to using native TensorFlow or PyTorch. The high-level API can obscure some of the underlying operations, making it harder to trace issues.

- Limited Lower-Level Control: Keras abstracts many details that advanced users might want to control, such as optimizer behaviors or layer-wise computations. If you need fine-grained control over these aspects, you may find it limiting.

- Memory Management: the framework can be less efficient in resource allocation and memory management compared to others. You can run into memory issues when training large models or using large datasets if you don’t optimize your models accordingly.

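- Limited Use for Non-Sequential Models: while Keras provides excellent support for sequential models, non-sequential models (for example, those with multiple inputs, outputs, or branches) require the functional API or model subclassing, which can be more cumbersome and less intuitive.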

MXNet

MXNet is an open-source deep learning framework designed for efficiency, flexibility, and adaptability, making it suitable for various applications, from research to production. Developed as an Apache Software Foundation project, MXNet is particularly known for its scalability and support for multiple programming languages.

Advantages:

- Dynamic and Static Computation Graphs: MXNet supports static and dynamic computation graphs, allowing you to define computation on the fly or create graphs in advance. This flexibility caters to various use cases, enabling rapid prototyping and optimization.

- Multi-Language Support: it provides interfaces for several programming languages, including Python, Scala, Julia, R, and others.

- Scalability: the framework scales efficiently on multiple GPUs and across distributed environments. It is particularly well-suited for training large-scale models and handling big data.

- Performance Optimization: MXNet is optimized for efficient memory usage and computational performance. It utilizes techniques like symbolic execution and automatic differentiation to optimize deep learning operations.

- Gluon API: it provides a flexible and expressive interface for defining models, similar to frameworks like Keras. Gluon allows you to build and train neural networks more intuitively and efficiently.

- Model Zoo: there is a collection of pre-trained models for various tasks, which can be fine-tuned and used for specific applications, saving time and computational resources.

- Community and Ecosystem: being an Apache project, MXNet has a community-driven development model, with contributions from researchers and developers worldwide. This fosters a growing ecosystem with various tools and libraries.

- Support for Mixed Precision Training: it supports mixed precision training, resulting in reduced memory usage and potentially faster training times on compatible hardware.
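
To see why mixed precision training needs some care, consider what happens to very small gradients in 16-bit floats. The sketch below uses only Python's standard library to round values to half precision and demonstrates loss scaling, a common companion technique. It does not use MXNet's API, and the scale factor is an arbitrary assumption.

```python
import struct


def to_fp16(value):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


LOSS_SCALE = 1024.0   # hypothetical factor; real setups often adjust it dynamically

true_gradient = 1e-8  # too small to represent in half precision

# Without scaling, the gradient underflows to zero when stored in 16 bits.
unscaled_fp16 = to_fp16(true_gradient)

# With loss scaling, the scaled gradient survives the 16-bit round trip...
scaled_fp16 = to_fp16(true_gradient * LOSS_SCALE)

# ...and is divided by the scale in full precision before the weight update.
recovered = scaled_fp16 / LOSS_SCALE
```

The unscaled gradient is lost entirely, while the scaled one is recovered to within a small rounding error. This is why mixed precision setups typically keep a full-precision copy of the weights and scale the loss before backpropagation.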

Use Cases:

- Image and Object Recognition: MXNet is often used for image classification, object detection, and segmentation tasks.

- Natural Language Processing: it is suitable for various NLP tasks like sentiment analysis, language translation, and text classification.

- Reinforcement Learning: MXNet supports the development of reinforcement learning applications, making it suitable for complex tasks like robotics and game playing.

- Time Series Forecasting: you can utilize it for forecasting tasks involving sequential data.

Weaknesses:

- Community and Ecosystem: MXNet has a smaller community than more popular frameworks like TensorFlow and PyTorch. As a result, there are fewer community-contributed resources, tutorials, and support options, making it harder for newcomers to find help or learn.

- Documentation: it can be less comprehensive or less user-friendly compared to the extensive documentation available for frameworks like TensorFlow and PyTorch. This can be a barrier for beginner developers trying to understand specific functionalities or troubleshoot issues.

- Complexity for Beginners: the dual nature of MXNet’s static and dynamic computation graphs can be confusing for beginners. While flexibility is a strength, it may also make it harder for newcomers to grasp the concepts quickly.

- Less Adoption in Research: MXNet has seen less adoption in academic research than PyTorch, whose dynamic computation graph and ease of use have made it the preferred choice for many researchers.

- Performance Tuning: while MXNet is generally performant, achieving optimal performance often requires considerable configuration and tuning. You may need to invest time in optimizing the models and environment to get the best results.

- Fewer Pre-trained Models: there are fewer pre-trained models compared to other frameworks that have larger user communities.

Deeplearning4j (DL4J)

Deeplearning4j is an open-source framework for the Java Virtual Machine (JVM), designed for building, training, and deploying deep learning models in enterprise environments. With a focus on integration within the broader Java ecosystem, it is particularly suitable for developers and organizations already working within Java-based applications.

Advantages:

- Java and Scala Integration: DL4J is natively designed for Java and Scala, making it accessible for developers already familiar with these languages. Also, the deep learning framework provides seamless integration with existing Java applications and enterprise systems.

- Support for Deep Learning Models: Deeplearning4j supports a wide range of machine learning algorithms and neural network architectures, including feedforward networks, CNNs, RNNs, and more. This versatility allows you to implement solutions for various applications, such as image classification, natural language processing, and time series forecasting.

- Distributed Training: DL4J is capable of distributed training across a cluster of machines, which helps speed up the training process for large datasets.

- Integration with Other Libraries: Deeplearning4j is part of the broader Skymind ecosystem, which includes additional libraries such as ND4J (for N-dimensional arrays in Java), DataVec (for data preprocessing and transformation), and RL4J (for reinforcement learning).

- Automatic Differentiation: it supports automatic differentiation, which simplifies the process of calculating gradients during backpropagation when training neural networks.

- Model Serialization: it allows easy model serialization, enabling trained models to be saved and loaded for deployment in production applications.

- Support for Various Data Sources: The deep learning framework can deal with a variety of data sources—including SQL databases, NoSQL databases, and Hadoop-based data stores—making it flexible for enterprise data architectures.

- Use in Production: with features that prioritize ease of integration and scalability, Deeplearning4j is well-suited for deployment in production environments. It also supports microservices architecture and can be integrated with cloud platforms.
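
Distributed training of the kind mentioned in this list is often built on data parallelism: each worker computes gradients on its own shard of the batch, and the results are averaged. The sketch below shows the idea in plain Python with a one-parameter model. It is written in Python for consistency with the other examples in this article rather than with DL4J's Java API, and every function name is hypothetical.

```python
def gradient(w, batch):
    """Gradient of mean squared error for the model y = w * x over a batch."""
    return sum(2.0 * (w * x - y) * x for x, y in batch) / len(batch)


def data_parallel_gradient(w, batch, num_workers):
    """Split the batch into equal shards, compute per-shard gradients, then average."""
    shard_size = len(batch) // num_workers
    shards = [batch[i * shard_size:(i + 1) * shard_size] for i in range(num_workers)]
    # On a real cluster, each shard's gradient would be computed on a separate machine.
    worker_grads = [gradient(w, shard) for shard in shards]
    return sum(worker_grads) / num_workers


# Toy data generated from y = 3x; the batch size divides evenly by the worker count.
data = [(float(x), 3.0 * x) for x in range(1, 9)]
w = 1.0

full = gradient(w, data)
parallel = data_parallel_gradient(w, data, num_workers=4)
```

With equally sized shards, the averaged gradient matches the gradient computed on the full batch, so the model sees the same update while the work is spread across machines. Production systems add communication, fault tolerance, and uneven shard handling on top of this basic idea.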

Use Cases:

- Financial Services: for applications such as fraud detection, credit scoring, and algorithmic trading.

- Healthcare: using models for medical diagnosis, patient monitoring, and drug discovery.

- Retail: for demand forecasting, recommendation systems, and customer segmentation.

- Manufacturing: for predictive maintenance and quality control processes.

Weaknesses:

- Complexity for Beginners: DL4J can be complex for beginners who are new to deep learning and Java development.

- Community and Ecosystem Size: the community is rather small and offers fewer resources, tutorials, and community-driven solutions for troubleshooting common problems.

- Limited Model Availability: while DL4J supports a variety of neural network architectures, it may not have as extensive a set of pre-trained models and resources as some other frameworks.

- Performance Compared to Other Frameworks: in certain scenarios, DL4J may not match the performance optimizations seen in other frameworks (e.g., TensorFlow or PyTorch). Still, it varies based on the specific use case, model architecture, and implementation details.

- Integration with Non-Java Ecosystems: while DL4J excels in Java and JVM environments, integrating with other ecosystems (like Python-based frameworks) may not be as seamless.

- Feature Maturity: as an open-source project, some features are not as mature or fully supported compared to well-established frameworks.

- Industry Adoption: DL4J is not widely adopted, so it can be challenging to find experienced developers or practitioners familiar with the tool.

Microsoft Cognitive Toolkit

The next one on our deep learning framework comparison list is the Microsoft Cognitive Toolkit, also known as CNTK. It is an open-source tool developed by Microsoft. It is designed to facilitate the creation, training, and evaluation of deep learning models for various tasks in AI.

Advantages:

- Flexible Architecture: CNTK supports a variety of deep learning models, including feedforward, convolutional, and recurrent neural networks. It can be used for both supervised and unsupervised learning tasks.

- Performance: CNTK is optimized for performance and scalability, enabling efficient training on multi-core CPUs and GPUs. It uses a highly efficient computation graph and can leverage parallel processing.

- Support for Multiple Languages: while primarily used with Python, CNTK also provides APIs for C#, C++, and Java, allowing developers to use the framework according to their preferred programming language.

- Deep Learning Toolkit: it includes a comprehensive set of tools for creating and training neural networks, as well as for evaluation and deployment of trained models.

- Integration with Other Tools: you can easily integrate the tool with other Microsoft products, such as Azure Machine Learning, making it suitable for enterprise applications.

- Model Export: you can export models to the ONNX (Open Neural Network Exchange) format, allowing for interoperability with other deep learning frameworks and platforms.

- Distributed Training: the framework supports distributed training across multiple machines and devices, which is especially useful for large-scale machine-learning tasks that require significant computational resources.

Use cases:

- Image Recognition: CNTK is used in computer vision tasks, such as image classification and object detection.

- Natural Language Processing: It is also applied in NLP tasks, including speech recognition, text classification, and sequence modeling.

- Reinforcement Learning: CNTK supports reinforcement learning algorithms for tasks where agents learn through trial and error.

Weaknesses:

- Steeper Learning Curve: If you are a beginner, you may find CNTK’s documentation and APIs less intuitive.

- Community Support: the community using CNTK is relatively small.

- Development Activity: Microsoft has shifted focus towards other machine learning tools, such as Azure ML and the ONNX ecosystem, leading to less active development of CNTK.

Caffe and Caffe2

Caffe and Caffe2 are deep learning frameworks that were created with a focus on performance and ease of use.

Caffe was developed by the Berkeley Vision and Learning Center (BVLC) and was first released in 2013. It is open-source and designed for speed and modularity, primarily aimed at computer vision tasks.

As a successor to Caffe, Facebook developed Caffe2, aiming to further enhance flexibility, scalability, and performance for deploying machine learning models, particularly in production environments. It was first introduced in 2017 and was designed to support both research and production.

In 2018, Caffe2 was merged into the PyTorch codebase, and its functionalities were consolidated into PyTorch, making PyTorch the primary framework for most developers. So Caffe2 is no longer independently developed or maintained.

It comes as no surprise that due to the rise and consolidation of frameworks like PyTorch, Caffe and Caffe2 have seen a decline in their independent usage, as many users have transitioned to other more versatile tools that support a wider range of applications.

Future Trends in Deep Learning Frameworks

The field of deep learning is evolving rapidly, and several trends are emerging in the development and use of deep learning frameworks:

Increased Modularity and Flexibility

Future frameworks are likely to adopt even more modular architectures, allowing developers to easily mix and match components (layers, optimizers, etc.) to facilitate experimentation.

Frameworks that support both static and dynamic computation graphs will gain more popularity, enabling both efficient deployments for production (static) and flexibility for research (dynamic).

Better Integration with Other Technologies

As deep learning is applied more widely in areas such as reinforcement learning, natural language processing, and computer vision, frameworks are expected to provide better interoperability with the tools used in these areas. Integration with emerging technologies like quantum computing and neuromorphic computing is also anticipated.

Focus on Accessibility and Usability

Frameworks will continue to improve regarding documentation, tutorials, and high-level APIs to lower the barrier to entry for people with little industry-related knowledge. User-friendly interfaces with tools for visualizing model architecture and performance will likely become standard features.

Optimized Models for Edge Computing

With the rise of Internet of Things (IoT) devices, there will be a focus on developing frameworks that can easily optimize models for edge devices, which have limited processing power. Techniques for model compression, pruning, and quantization will become more prevalent, allowing for efficient deployment in real-time applications.
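
As a rough illustration of two of the techniques named above, the following plain-Python sketch applies symmetric 8-bit quantization and magnitude-based pruning to a short list of weights. It is a simplified assumption of how such steps work, not the implementation used by any specific framework, and the weight values are made up.

```python
def quantize_int8(weights):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Map integer codes back to approximate float values."""
    return [q * scale for q in quantized]


def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    num_to_prune = int(len(weights) * fraction)
    by_magnitude = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    smallest = set(by_magnitude[:num_to_prune])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


weights = [0.02, -1.27, 0.64, -0.01, 0.33, 0.9]  # made-up example values

codes, scale = quantize_int8(weights)
restored = dequantize(codes, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

pruned = prune_by_magnitude(weights, fraction=0.5)
```

Quantization stores each weight in one byte instead of four, at the cost of a rounding error of at most half the scale per weight. Pruning removes the weights that contribute least, and in practice it is usually followed by fine-tuning to recover accuracy.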

Emphasis on Energy Efficiency

As deep learning becomes more ubiquitous, the energy consumption of training and inference processes will be a significant concern. Future frameworks will likely include built-in optimizations for reducing energy usage during model training and inference.

Automated Machine Learning (AutoML)

The integration of AutoML features into deep learning frameworks is set to rise, enabling automated hyperparameter tuning, model selection, and architecture search. This trend will help streamline the model development process and make it accessible to a broader audience.
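
Automated hyperparameter tuning can be as simple as random search. The sketch below shows the core loop in plain Python. The `validation_loss` function is a stand-in with a made-up shape: in a real project it would train a model with the given settings and return its validation error.

```python
import random


def validation_loss(learning_rate, hidden_units):
    """Stand-in objective; a real one would train a model and evaluate it."""
    # Hypothetical bowl-shaped surface with its minimum at lr=0.01, units=64.
    return (learning_rate - 0.01) ** 2 * 1e4 + ((hidden_units - 64) / 64) ** 2


def random_search(num_trials, seed=0):
    """Sample configurations at random and keep the one with the lowest loss."""
    rng = random.Random(seed)
    best_config, best_loss = None, float("inf")
    for _ in range(num_trials):
        config = {
            # Sample the learning rate log-uniformly between 1e-4 and 1e-1.
            "learning_rate": 10 ** rng.uniform(-4, -1),
            "hidden_units": rng.choice([16, 32, 64, 128, 256]),
        }
        loss = validation_loss(**config)
        if loss < best_loss:
            best_config, best_loss = config, loss
    return best_config, best_loss


best_config, best_loss = random_search(num_trials=50)
```

More advanced AutoML tools replace the random sampling with smarter strategies, such as Bayesian optimization or architecture search, but the loop of proposing a configuration, evaluating it, and keeping the best one stays the same.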

Cross-Disciplinary Collaboration

Collaboration between researchers in different domains (e.g., healthcare, finance, etc.) and deep learning framework developers will lead to the creation of specialized tools tailored for various industries. Frameworks may offer specific libraries or modules designed for particular applications, enhancing their utility and effectiveness in various sectors.

Continued Evolution of Community-Driven Development

Open-source contributions will continue to shape the development, with growing communities pushing for new features and improvements. Initiatives to convert research codebases into robust frameworks will become more common, bridging the gap between cutting-edge research and practical application.

Conclusion

Choosing between popular deep learning frameworks is a critical step that can significantly influence the success of your machine learning projects. The ideal framework should align with your specific needs, whether you prioritize ease of use, flexibility, or advanced features.

When choosing a framework, consider factors such as project requirements, your familiarity with it, available community support, and the type of deployment you anticipate. We hope this deep learning framework comparison will help you make an informed choice that sets the stage for successful endeavors.
