You’ve built your e-commerce website for 100 potential users, but after growing, you regularly face traffic spikes of up to 1,000 users, and your systems break? Each second of downtime hurts conversion, and buying another server doesn’t solve the issue. Or maybe, as a fintech business, you suffer from unexpected RPS spikes? Delays in transaction processing lead to financial losses for both you and your clients, and sometimes even fines.


We asked our Lead .NET Architect, Denis Strukachev, who has built high-traffic systems for platforms serving millions of users daily, to share how such systems stay resilient. He explained what high load really means in .NET projects and covered optimization and scaling strategies, architectural approaches, and more.

In this interview, you’ll learn the real patterns behind scalable high-load .NET architecture we use daily in our projects.

Introduction—Who the Expert Is and Why This Topic Matters

The expert’s background and flagship high-load projects

Denis Strukachev has been developing in .NET since 2006. He started with Windows applications and, in 2014, switched to web services.

One of his major high-load projects was a system for purchase accounting and control. Key figures:

- 5 services within the working domain

- more than 1000 RPS for the central service

- services deployed in two clusters, 2-3 pods in each cluster, using Kubernetes

- high availability requirements, classified as business critical, 24-hour support

- target SLA was 99.8%
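A 99.8% SLA is easier to reason about as a downtime budget. The Python sketch below does the arithmetic, assuming a 30-day month and a 365-day year; the function name is ours and not part of any tool.

```python
def downtime_budget_minutes(sla_percent: float, period_days: float) -> float:
    """Return the allowed downtime, in minutes, for an availability target over a period."""
    total_minutes = period_days * 24 * 60
    return total_minutes * (1 - sla_percent / 100)


# 99.8% over an assumed 30-day month and a 365-day year
monthly = downtime_budget_minutes(99.8, 30)
yearly = downtime_budget_minutes(99.8, 365)
print(f"monthly: {monthly:.1f} min, yearly: {yearly / 60:.1f} h")
```

That is roughly 86 minutes a month, or about 17.5 hours a year, for all incidents combined.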

The types of scalability challenges clients bring to Shakuro

Usually, clients need high availability in distributed systems. So we have to optimize performance at the bottlenecks, such as databases and APIs, while dealing with data consistency and partition tolerance.

Now, time to begin our .NET architecture interview!

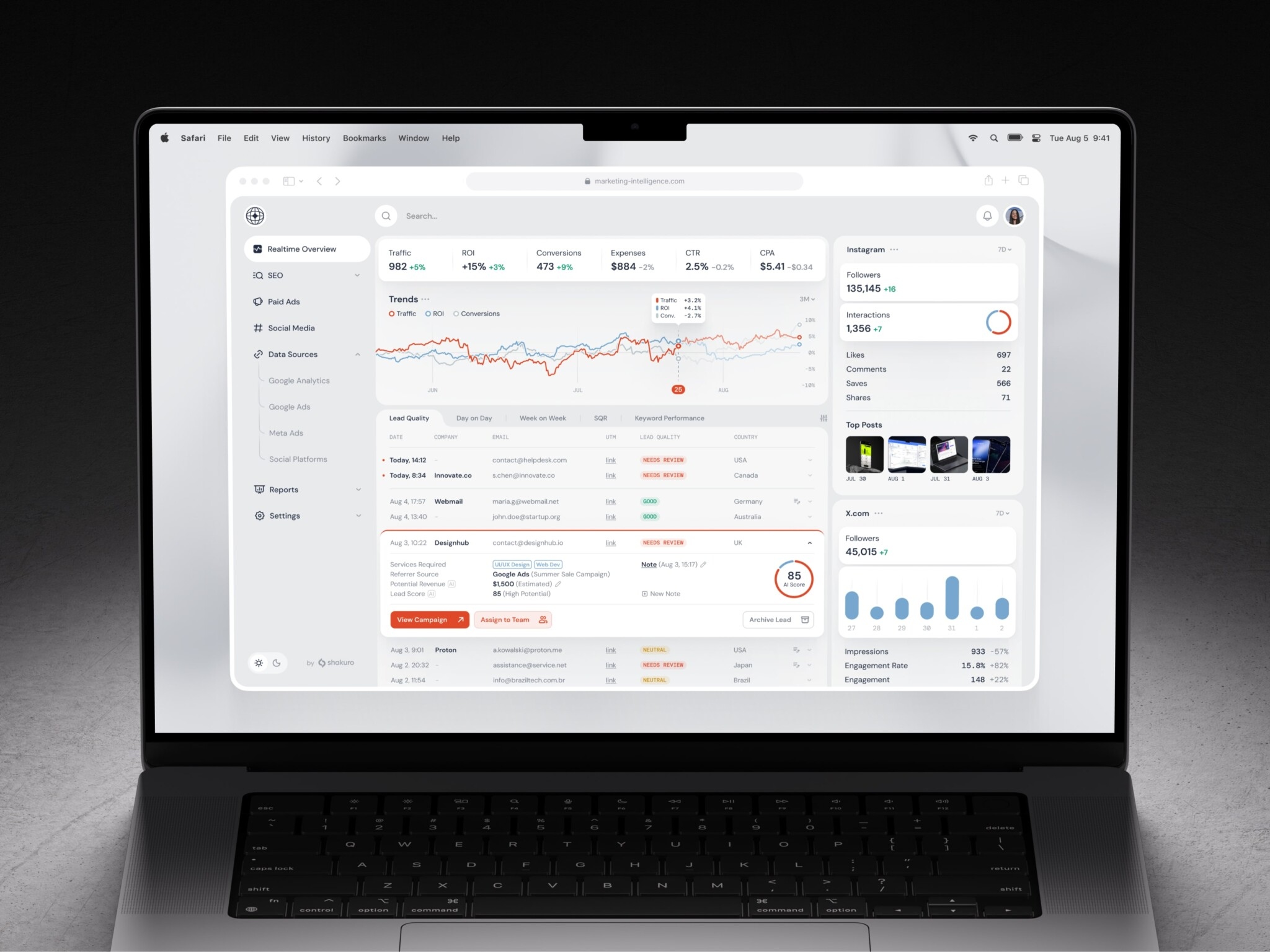

Real-Time Predictive Analytics Dashboard Design by Shakuro

“High-Load Isn’t Just About RPS.” What Does It Really Mean in .NET Projects?

What is high load?

In my opinion, high load is inextricably linked to availability requirements and SLA indicators. This, in turn, requires the collection of metrics and their careful analysis. Beyond RPS, high load is characterized by latency percentiles (P95 and P99), error rates, and other parameters. A high-load .NET architecture must be ready for peak loads and respond to growth in the number of users and the volume of data processed.

High load is inextricably linked to availability requirements and SLA indicators. This, in turn, requires the collection of metrics and their careful analysis. © Denis Strukachev

What metrics actually matter?

Tail latency is a crucial metric, along with the 95th percentile, overall RPS, RPS by response code, throttling, etc.

In other words, P95 and P99 are essential for analysis. They show how the system behaves in rare but critically slow cases that average metrics (mean or median) hide. These metrics allow you to identify problems in a timely manner and take action before they become apparent to all users.
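To see how averages hide the tail, the Python sketch below computes the mean, the median, and nearest-rank P95/P99 over a hypothetical set of 100 request latencies. Nearest-rank is only one percentile definition; monitoring systems often estimate percentiles from histograms instead.

```python
import math
import statistics


def percentile(samples: list, p: float) -> float:
    """Nearest-rank percentile: the smallest sample with at least p% of samples at or below it."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(p * len(ordered) / 100))  # 1-based rank
    return ordered[rank - 1]


# Hypothetical latencies in ms: 90 fast requests, 7 slow ones, 3 very slow ones
latencies = [20.0] * 90 + [300.0] * 7 + [900.0, 1200.0, 1500.0]

print("mean:", statistics.mean(latencies))
print("median:", statistics.median(latencies))
print("P95:", percentile(latencies, 95))
print("P99:", percentile(latencies, 99))
```

Here the mean (75 ms) and the median (20 ms) look healthy, while P95 (300 ms) and P99 (1,200 ms) expose the slow tail.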

What are the typical bottlenecks you often notice in pre-optimization client systems?

Oh, there are many. For example, an excessive number of database queries (the N + 1 problem), missing indexes, an incorrectly implemented retry policy, lack of consistency in the final result, or a missing or misconfigured cache. In rare cases, memory leaks, although these are uncommon in modern .NET.
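To make the N + 1 problem concrete, here is a small Python sketch using an in-memory SQLite database. The schema, the `CountingConnection` wrapper, and the function names are hypothetical. The point is the query count: loading 50 orders with their items one order at a time costs 51 queries, while a single join costs one.

```python
import sqlite3


class CountingConnection:
    """Wraps a sqlite3 connection and counts the queries sent to the database."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.queries = 0

    def execute(self, sql: str, params: tuple = ()) -> sqlite3.Cursor:
        self.queries += 1
        return self.conn.execute(sql, params)


def setup_db(order_count: int = 50) -> CountingConnection:
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT);
        CREATE TABLE items (id INTEGER PRIMARY KEY, order_id INTEGER, sku TEXT);
        """
    )
    for order_id in range(1, order_count + 1):
        conn.execute("INSERT INTO orders VALUES (?, ?)", (order_id, f"customer-{order_id}"))
        conn.executemany(
            "INSERT INTO items (order_id, sku) VALUES (?, ?)",
            [(order_id, "sku-a"), (order_id, "sku-b")],
        )
    return CountingConnection(conn)  # setup inserts above are not counted


def load_n_plus_one(db: CountingConnection) -> dict:
    # 1 query for the orders + 1 query per order = N + 1 round trips
    result = {}
    for (order_id,) in db.execute("SELECT id FROM orders ORDER BY id").fetchall():
        rows = db.execute(
            "SELECT sku FROM items WHERE order_id = ? ORDER BY id", (order_id,)
        ).fetchall()
        result[order_id] = [sku for (sku,) in rows]
    return result


def load_with_join(db: CountingConnection) -> dict:
    # A single query returns orders and their items together
    result = {}
    rows = db.execute(
        "SELECT o.id, i.sku FROM orders o JOIN items i ON i.order_id = o.id ORDER BY o.id, i.id"
    ).fetchall()
    for order_id, sku in rows:
        result.setdefault(order_id, []).append(sku)
    return result
```

In EF Core, the usual fix is eager loading with `Include` or a projection with `Select`, so related data arrives in one query (or a fixed number of queries) rather than one per entity.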

Why Is .NET a Strong Choice for High-Load Architectures?

What are the strengths of .NET?

The garbage collector, deep support for asynchrony, static typing, and compilation. It’s well optimized, and in the latest .NET versions the framework is really fast. I would also highlight its continuous development and a large ecosystem of libraries (for databases, logging, message brokers, etc.).

Are there any real-world scenarios where .NET outperforms other stacks?

.NET excels in scenarios requiring sustained throughput with low latency and efficient resource utilization, especially in backend services handling complex business logic under heavy concurrency.

For example, large-scale e-commerce platforms during flash sales or global media streaming services managing millions of concurrent API calls (like user authentication, content metadata delivery, or recommendation engines) often see .NET outperform interpreted or dynamically typed stacks.

Thanks to its highly optimized runtime, native async/await support, compile-time safety, and tight integration with Kubernetes and cloud-native tooling via ASP.NET Core, .NET delivers consistent performance with lower server costs and predictable scaling.

.NET excels in scenarios requiring sustained throughput with low latency and efficient resource utilization. © Denis Strukachev

What Architectural Approaches Work in .NET for High-Load Systems?

When is a monolith perfectly acceptable, and when are microservices necessary?

Don’t mindlessly break everything down into microservices. A microservice should cover a specific functional domain. Services that are too small (such as those found in various textbooks or courses) will only create problems.

A microservice should be separated if:

- There are clearly defined functions in a separate domain

- Separate fault tolerance strategies are required

- You need a different stack

- Its dedicated functions need to be shared with other services

In other cases, it is sufficient to have a good modular monolith.

How do you use event-driven architecture (EDA) with tools such as Kafka or RabbitMQ to decompose and scale high-load services in .NET?

You should use brokers when services interact asynchronously, when you have consistency requirements, or when you need to build scalable .NET applications. Brokers guarantee message delivery to consumers according to defined delivery semantics (for example, at-least-once), make it easy to scale and parallelize processing across instances, and improve service performance.
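The scaling benefit of a broker comes from several consumers sharing one stream of messages. The Python sketch below uses an in-process `queue.Queue` as a hypothetical stand-in for a broker queue: three workers compete for 100 messages, and each message is handled by exactly one worker. With RabbitMQ this resembles several consumers on one queue; with Kafka, parallelism comes from topic partitions assigned to consumers in the same consumer group.

```python
import queue
import threading


def run_competing_consumers(messages: list, worker_count: int = 3) -> list:
    """Workers compete for messages on one shared queue; each message is handled once."""
    q: queue.Queue = queue.Queue()
    for message in messages:
        q.put(message)

    handled = []  # (worker_id, message) pairs
    lock = threading.Lock()

    def worker(worker_id: int) -> None:
        while True:
            try:
                message = q.get_nowait()
            except queue.Empty:
                return  # queue drained: this worker stops
            # A real handler would do I/O here (write to a DB, call a service, ...)
            with lock:
                handled.append((worker_id, message))

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(worker_count)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return handled


handled = run_competing_consumers(list(range(100)), worker_count=3)
```

A real broker adds what this sketch leaves out: persistence, acknowledgments, and redelivery of messages whose handler crashed.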

Which architectural patterns are most effective in preventing common bottlenecks?

CQRS and Outbox (Inbox) are the most common patterns I have used in projects.

CQRS (Command Query Responsibility Segregation) separates commands (writes) from queries (reads), which reduces the load on database nodes and lets you distribute reads. A primary node handles writes while replicas serve reads; with synchronous replication, this setup can significantly increase performance.
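The read/write split behind CQRS can be sketched as a router that sends commands to the primary and spreads queries across replicas. In this Python sketch, `FakeNode` and `CqrsRouter` are hypothetical names, and round-robin is just one possible replica-selection policy.

```python
import itertools
from dataclasses import dataclass, field


@dataclass
class FakeNode:
    """Hypothetical stand-in for a database node that records what it executed."""
    name: str
    log: list = field(default_factory=list)

    def run(self, statement: str) -> str:
        self.log.append(statement)
        return self.name


class CqrsRouter:
    """Sends commands (writes) to the primary and queries (reads) to replicas."""

    def __init__(self, primary: FakeNode, replicas: list):
        self.primary = primary
        self._replicas = itertools.cycle(replicas)  # simple round-robin over replicas

    def execute_command(self, statement: str) -> str:
        return self.primary.run(statement)

    def execute_query(self, statement: str) -> str:
        return next(self._replicas).run(statement)


primary, replica_a, replica_b = FakeNode("primary"), FakeNode("replica-a"), FakeNode("replica-b")
router = CqrsRouter(primary, [replica_a, replica_b])
router.execute_command("INSERT INTO orders (id) VALUES (1)")
served_by = [router.execute_query("SELECT * FROM orders") for _ in range(4)]
```

With asynchronous replication, a query could reach a replica that has not yet applied a recent write; synchronous replication avoids that at the cost of slower writes.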

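With Outbox (and its counterpart, Inbox), you can achieve eventual consistency between services: the outgoing message is saved in the same database transaction as the business data and published afterward by a separate process. This also makes the retry algorithm fairly easy to implement.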
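The core of the Outbox pattern is that the business row and the outgoing message commit in one local transaction, and a separate relay later publishes the message, retrying until it succeeds. The Python sketch below uses SQLite. The table layout, function names, and the `publish` callback are assumptions, not a specific library's API.

```python
import json
import sqlite3


def create_schema(conn: sqlite3.Connection) -> None:
    conn.executescript(
        """
        CREATE TABLE orders (id INTEGER PRIMARY KEY, total REAL);
        CREATE TABLE outbox (
            id INTEGER PRIMARY KEY AUTOINCREMENT,
            payload TEXT NOT NULL,
            sent INTEGER NOT NULL DEFAULT 0
        );
        """
    )


def place_order(conn: sqlite3.Connection, order_id: int, total: float) -> None:
    # The business write and the outbox write commit (or roll back) together
    with conn:
        conn.execute("INSERT INTO orders (id, total) VALUES (?, ?)", (order_id, total))
        conn.execute(
            "INSERT INTO outbox (payload) VALUES (?)",
            (json.dumps({"type": "OrderPlaced", "order_id": order_id}),),
        )


def relay_outbox(conn: sqlite3.Connection, publish, batch_size: int = 100) -> int:
    """Publish unsent messages in order; mark each one sent only after publish succeeds."""
    rows = conn.execute(
        "SELECT id, payload FROM outbox WHERE sent = 0 ORDER BY id LIMIT ?", (batch_size,)
    ).fetchall()
    for msg_id, payload in rows:
        publish(payload)  # may raise: the row stays unsent and is retried on the next run
        with conn:
            conn.execute("UPDATE outbox SET sent = 1 WHERE id = ?", (msg_id,))
    return len(rows)
```

A crash between `publish` and the `UPDATE` causes a resend, so delivery is at-least-once, and consumers should deduplicate. That is the job of the Inbox side of the pattern.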

CQRS and Outbox (Inbox) are the most common patterns for preventing typical bottlenecks. © Denis Strukachev

Scaling Strategies: Preparing Applications for Growth

How to understand when you should scale? How to choose between horizontal and vertical scaling?

The best way to understand whether it’s time to scale is to look at metrics: 95th and 99th percentile latency, memory consumption, and the frequency and number of errors.

If you use brokers like Kafka, a clear indicator is the lag in message processing when messages are stuck in the queue. As for the Outbox pattern, an important indicator is the time it takes to clear the queue in the database.
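Both signals reduce to simple arithmetic. Consumer lag for a Kafka partition is the log-end offset minus the consumer group's committed offset, which is the `LAG` column that `kafka-consumer-groups.sh --describe` reports. For an outbox table, a useful proxy is the age of the oldest unsent row. The Python sketch below uses made-up sample offsets, and its function names are hypothetical.

```python
def consumer_lag(end_offsets: dict, committed: dict) -> dict:
    """Per-partition lag = log-end offset minus the consumer group's committed offset.
    Partitions with no committed offset are assumed to start at 0 (an assumption)."""
    return {p: end_offsets[p] - committed.get(p, 0) for p in end_offsets}


def outbox_backlog_age(unsent_created_at: list, now: float) -> float:
    """Age, in seconds, of the oldest unsent outbox row (0.0 when the outbox is drained)."""
    return now - min(unsent_created_at) if unsent_created_at else 0.0


# Hypothetical snapshot of a three-partition topic
lag = consumer_lag({0: 1500, 1: 1200, 2: 900}, {0: 1500, 1: 400, 2: 850})
total_lag = sum(lag.values())
```

A lag or backlog age that keeps growing across consecutive readings is a stronger scaling signal than any single value.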

When choosing between horizontal and vertical scaling, you need to understand what exactly needs scaling in your distributed .NET system. If the bottleneck is in the service itself, you can increase the number of pods. If the problem lies in connections or memory/CPU consumption on the database server, consider adding resources and optimizing queries (or the storage architecture).

As a rule, during peaks, you can increase memory and processor power, then conduct a post-mortem and make an architectural decision.

How did Kubernetes and cloud-native .NET change the approach to auto-scaling?

Thanks to Kubernetes, it has become much easier to automate scaling. Instead of adding instances manually, you can configure autoscaling (for example, a Horizontal Pod Autoscaler) that reacts to metrics much faster.
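The Kubernetes Horizontal Pod Autoscaler documentation gives its core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change while the ratio stays within a tolerance (0.1 by default). The Python sketch below implements only that rule plus min/max bounds. The real controller also applies stabilization windows and pod-readiness handling, and the bounds used here are hypothetical.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA scaling rule: scale pod count proportionally to metric / target."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: no change
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))
```

For example, 3 pods averaging 90% CPU against a 60% target scale to `ceil(3 × 1.5) = 5` pods.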

How do you use distributed caching and message brokers as scaling levers?

Brokers enable asynchronous processing, which reduces response time for users. They also parallelize message processing across instances and provide a retry mechanism.

Redis allows you to cache data, reducing database access, and enables distributed locking, thereby stabilizing the operation of distributed jobs, etc.
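Cache-aside is a common way to put Redis in front of a database: check the cache first, and on a miss, read from the database and populate the cache. The Python sketch below uses a hypothetical in-memory `TtlCache` in place of Redis so that it runs on its own. The key format, the TTL, and the injectable clock are assumptions.

```python
import time
from typing import Callable


class TtlCache:
    """Hypothetical in-memory stand-in for a distributed cache such as Redis."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._data = {}  # key -> (expires_at, value)

    def get(self, key: str):
        entry = self._data.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            del self._data[key]  # expired: behave like a miss
            return None
        return value

    def set(self, key: str, value) -> None:
        self._data[key] = (self.clock() + self.ttl, value)


def get_product(cache: TtlCache, product_id: str, load_from_db: Callable[[str], dict]) -> dict:
    """Cache-aside: serve from the cache, otherwise load from the DB and cache the result."""
    key = f"product:{product_id}"
    cached = cache.get(key)
    if cached is not None:
        return cached
    value = load_from_db(product_id)
    cache.set(key, value)
    return value
```

For distributed locks, Redis is commonly used with `SET key value NX PX <milliseconds>`. This sets the key only if it does not exist and gives it an expiry, so a crashed lock holder cannot block other instances forever.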

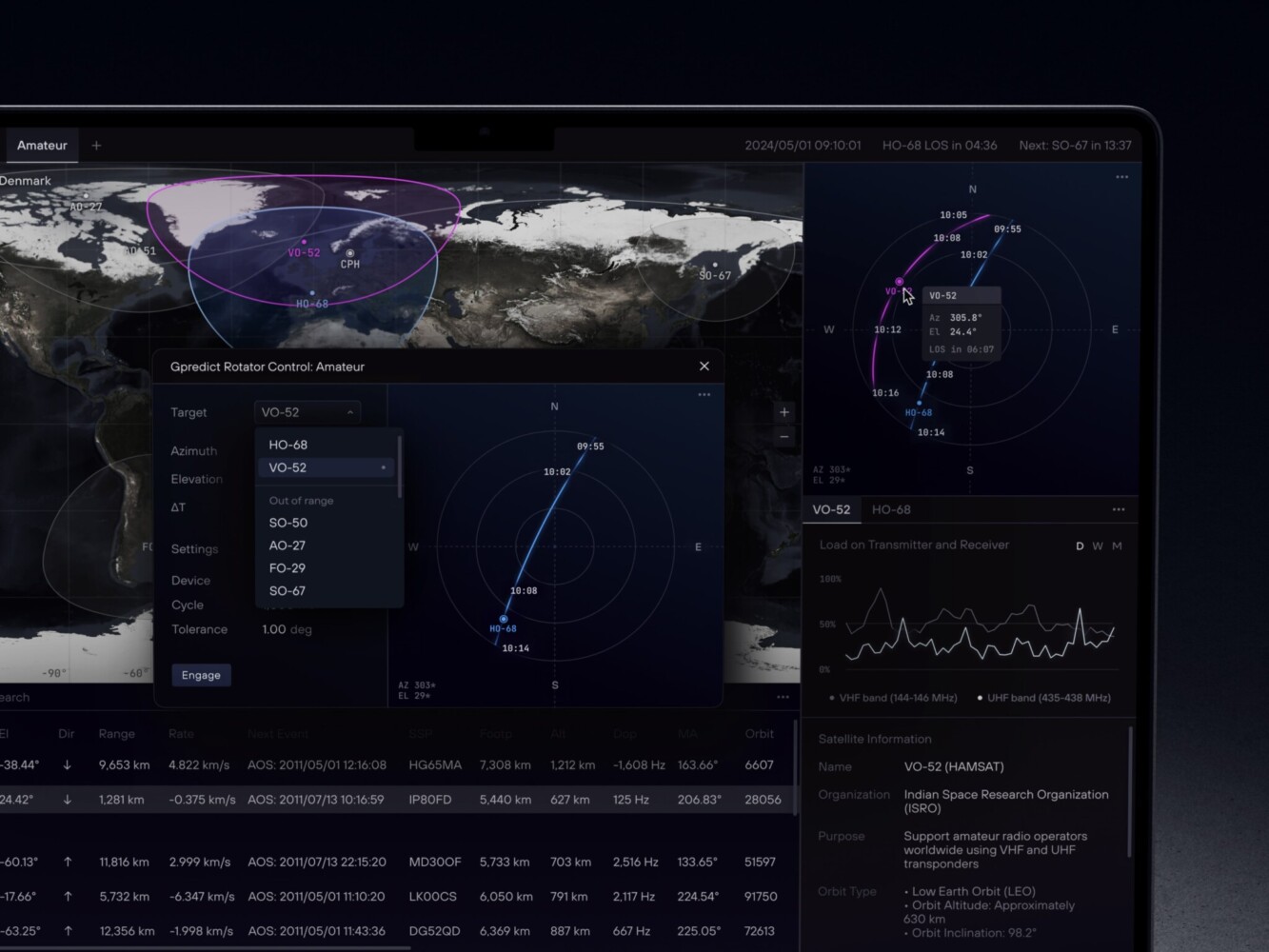

TT Hoves in Satellite Monitoring and Management by Shakuro

“Optimize Before You Add More Servers.” Performance Tuning in .NET

What are the main tactics for significantly reducing the load?

My tactics for .NET performance optimization are simple. Minimize the creation of large objects (which end up on the Large Object Heap, LOH) and unnecessary allocations (for example, strings), and avoid sync-over-async.
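Sync-over-async in .NET means blocking on asynchronous work, for example with `.Result`, `.Wait()`, or `.GetAwaiter().GetResult()`, which ties up thread-pool threads. Python's asyncio has a close analogue: a blocking call inside a coroutine stalls the whole event loop. The sketch below shows that analogue and is not .NET code. Five concurrent requests that each wait 50 ms take about 250 ms when the wait blocks and about 50 ms when it is awaited.

```python
import asyncio
import time


async def handler_blocking(delay: float) -> None:
    # Anti-pattern: a blocking call inside async code stalls the event loop,
    # much like .Result/.Wait() ties up a thread-pool thread in .NET
    time.sleep(delay)


async def handler_async(delay: float) -> None:
    # Correct: awaiting yields control so other requests can make progress
    await asyncio.sleep(delay)


async def serve(handler, requests: int, delay: float) -> float:
    """Run `requests` concurrent handlers and return the elapsed wall-clock time."""
    start = time.perf_counter()
    await asyncio.gather(*(handler(delay) for _ in range(requests)))
    return time.perf_counter() - start


blocking_time = asyncio.run(serve(handler_blocking, requests=5, delay=0.05))
async_time = asyncio.run(serve(handler_async, requests=5, delay=0.05))
print(f"blocking: {blocking_time:.2f}s, async: {async_time:.2f}s")
```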

When working with a database, try to use connection pooling and DbContext pooling, even though this is not always optimal. Use types such as ArrayPool<T> and Span<T>. If you can stream data, use tools such as IAsyncEnumerable<T>. And, of course, avoid blocking.
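Streaming with `IAsyncEnumerable<T>` (consumed with `await foreach` in C#) keeps only a small window of data in memory. Python's async generators play the same role, so the sketch below uses one. `fetch_page` is a hypothetical paged data source, and the page size is arbitrary.

```python
import asyncio
from typing import AsyncIterator


async def fetch_page(offset: int, limit: int, total: int) -> list:
    """Hypothetical data source: returns one page of row ids."""
    await asyncio.sleep(0)  # stands in for real asynchronous I/O
    return list(range(offset, min(offset + limit, total)))


async def stream_rows(total: int, page_size: int = 1000) -> AsyncIterator[int]:
    """Yield rows one by one while holding at most one page in memory."""
    offset = 0
    while True:
        page = await fetch_page(offset, page_size, total)
        if not page:
            return
        for row in page:
            yield row
        offset += len(page)


async def sum_rows(total: int) -> int:
    acc = 0
    async for row in stream_rows(total, page_size=250):
        acc += row
    return acc


print(asyncio.run(sum_rows(10_000)))
```

Memory use is bounded by the page size rather than by the total number of rows.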

What is your usual profiling workflow?

Mostly, I rely on OpenTelemetry metrics. I very rarely resort to taking trace dumps.

What are the most common anti-patterns that critically undermine performance?

In my practice, the most common ones are:

- sync-over-async

- N + 1 problem

- No caching where it’s needed.

Sync-over-async, the N + 1 problem, and the absence of caching where it’s needed are the most common anti-patterns. © Denis Strukachev

The Data Layer in High-Load Architectures

What are the main criteria for choosing between an SQL database and a NoSQL solution?

I would say that the main criteria are scalability requirements and whether you need a flexible data schema rather than a rigid one.

How do you choose the appropriate strategy among replication, sharding, and partitioning for scaling the data layer?

If, when designing a high-load .NET architecture, it is clear that there are significantly more read operations than write operations, it would be more logical to divide the system into a main database (for writing) and replicas (for reading). Synchronous replication is preferable.

Partitions are useful for splitting enormous tables. Indexes become smaller, with each separate partition having its own index. It is very easy to delete old data—just delete the partition. It is relevant, for example, for outbox/inbox tables, when you need to store some history of recent records and the old ones are no longer needed.
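Dropping a whole partition is cheap compared with deleting millions of rows, which in PostgreSQL leave dead tuples for autovacuum to clean up. PostgreSQL has declarative partitioning (`PARTITION BY RANGE`, with old partitions removed via `DROP TABLE` or detached with `DETACH PARTITION`). SQLite has no partitioning, so the Python sketch below emulates daily partitions with one table per day. The naming scheme and helper names are hypothetical.

```python
import sqlite3
from datetime import date, timedelta


def partition_name(day: date) -> str:
    return f"outbox_{day:%Y%m%d}"


def insert_event(conn: sqlite3.Connection, day: date, payload: str) -> None:
    table = partition_name(day)
    # Identifiers cannot be bound as parameters; the name is built from a date, never from user input
    conn.execute(f"CREATE TABLE IF NOT EXISTS {table} (id INTEGER PRIMARY KEY, payload TEXT)")
    conn.execute(f"INSERT INTO {table} (payload) VALUES (?)", (payload,))


def drop_partitions_older_than(conn: sqlite3.Connection, cutoff: date) -> list:
    """Drop whole daily tables older than `cutoff` instead of deleting rows one by one."""
    names = [n for (n,) in conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' AND name GLOB 'outbox_*'"
    )]
    dropped = []
    for name in sorted(names):
        if name < partition_name(cutoff):  # YYYYMMDD suffixes sort chronologically
            conn.execute(f"DROP TABLE {name}")
            dropped.append(name)
    return dropped
```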

If you have a distributed .NET system and can logically divide data across different databases, sharding is a good solution. However, it’s not easy. There are many difficulties, for instance, choosing a sharding algorithm or distributing queries and transactions across shards.
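A minimal sharding algorithm is a stable hash of the sharding key modulo the shard count. The Python sketch below uses SHA-256 because Python's built-in `hash()` for strings is randomized per process, which makes it unusable for routing data; the same is true of `string.GetHashCode()` in modern .NET. The key format and function names are hypothetical.

```python
import hashlib


def shard_for(key: str, shard_count: int) -> int:
    """Map a sharding key (for example, a customer id) to a shard with a stable hash."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


def moved_fraction(keys: list, old_count: int, new_count: int) -> float:
    """Share of keys that land on a different shard after changing the shard count."""
    moved = sum(shard_for(k, old_count) != shard_for(k, new_count) for k in keys)
    return moved / len(keys)


keys = [f"customer-{i}" for i in range(10_000)]
print(f"{moved_fraction(keys, 4, 5):.0%} of keys move when going from 4 to 5 shards")
```

Roughly 80% of keys change shards when going from 4 to 5, because with plain modulo a key stays put only if its hash leaves the same remainder for both counts. This is part of why choosing the algorithm is hard; consistent hashing or a lookup directory reduce this data movement.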

Which is better—EF Core or Dapper—for performance-critical systems?

According to the latest tests, EF Core has almost caught up with Dapper. But it is more important to analyze the service and the tasks it solves.

If the service contains minimal business logic, has no complex architecture, and requires precisely tuned database queries (a job or data download service, for example), Dapper is the go-to option. In other scenarios, where existing entities and classes can be reused, EF Core is the optimal choice. It has a number of settings that let you minimize query execution time.

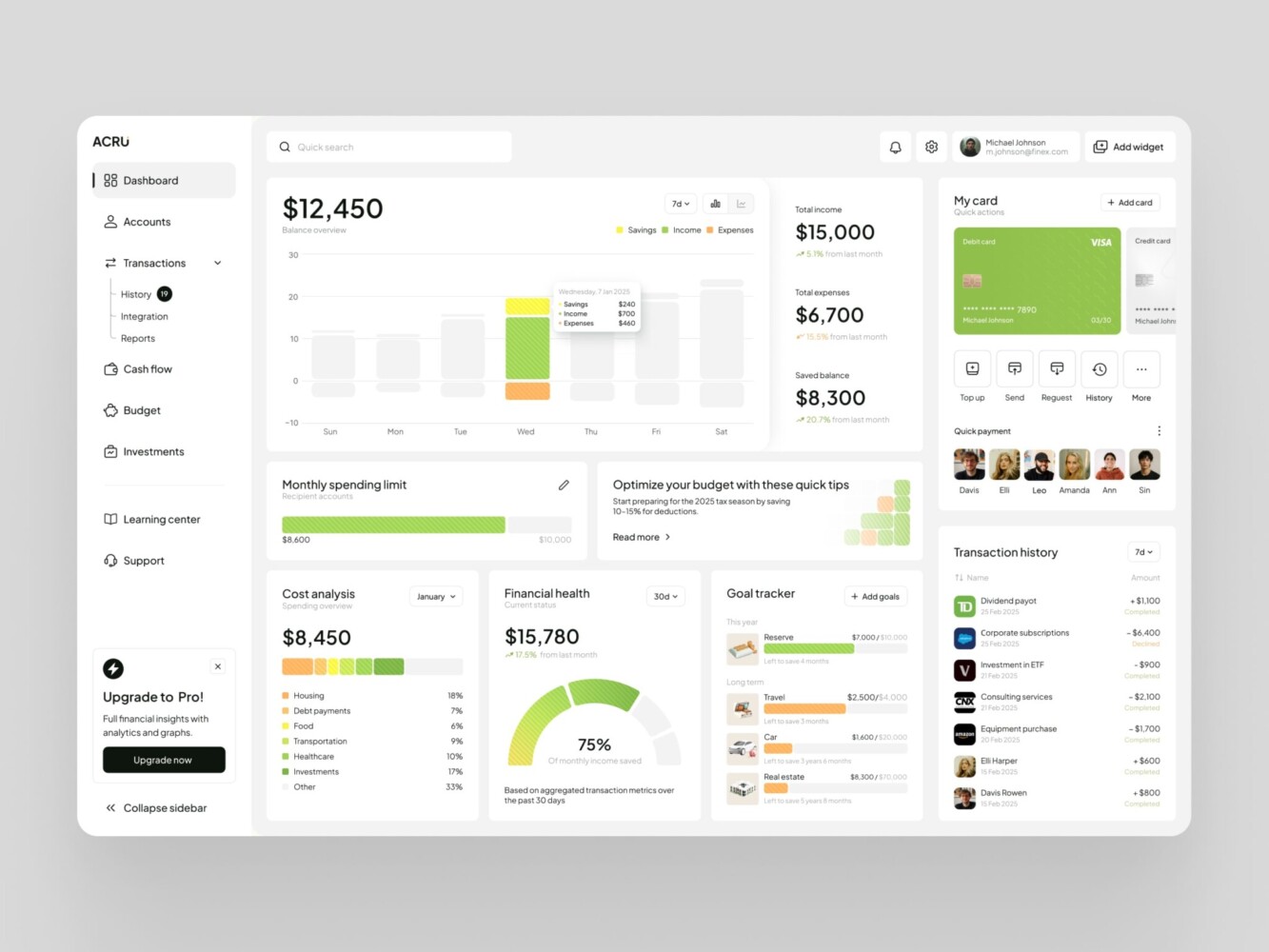

Dashboard Design for Personal Finance Management by Shakuro

Building Reliability & Fault Tolerance Into .NET Systems

Do patterns such as circuit breakers and exponential backoff directly contribute to the stability of the entire high-load ecosystem?

These patterns are extremely useful and allow you to controllably reduce the load on other services with which the main service interacts.
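Exponential backoff spaces out retries so that a struggling dependency is not hammered by synchronized retry storms. Random jitter spreads retries from many clients further apart. The Python sketch below uses the "full jitter" variant, where each delay is drawn uniformly between zero and an exponentially growing cap. In .NET, libraries such as Polly provide production-ready retry policies; the function names and default values here are hypothetical.

```python
import random
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def backoff_delays(retries: int, base: float = 0.1, cap: float = 5.0,
                   rng: Optional[random.Random] = None) -> list:
    """Full jitter: delay_n is uniform in [0, min(cap, base * 2**n)]."""
    rng = rng or random.Random()
    return [rng.uniform(0, min(cap, base * 2 ** attempt)) for attempt in range(retries)]


def retry(operation: Callable[[], T], retries: int = 4,
          sleep: Callable[[float], None] = time.sleep, **backoff_kwargs) -> T:
    """Call `operation`, retrying on exceptions with jittered exponential backoff."""
    for delay in backoff_delays(retries, **backoff_kwargs):
        try:
            return operation()
        except Exception:
            sleep(delay)
    return operation()  # final attempt: let any exception propagate
```

Only retry operations that are safe to repeat (idempotent); otherwise the retries themselves can create duplicates.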

What is an example of a graceful degradation pattern to maintain the core UX even when a critical upstream dependency fails?

First and foremost, a properly configured cache and fallback are a must. If you fail to reach the upstream service, the same circuit breaker can stop further calls, and you return the cached value or a fallback to the client.
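A circuit breaker combined with a fallback can be sketched in a few dozen lines. In this Python sketch, the class and parameter names are hypothetical, and the code is not thread-safe. The breaker opens after three consecutive failures and, while open, returns the fallback (for example, a cached value) without touching the upstream. Once the timeout expires, it lets a single trial call through.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitBreaker:
    """Minimal, single-threaded circuit breaker.

    Closed: calls go through. After `failure_threshold` consecutive failures it opens
    and returns the fallback without calling the upstream. After `reset_timeout`
    seconds it lets one trial call through (half-open); a failure re-opens it.
    """

    def __init__(self, failure_threshold: int = 3, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None

    def call(self, operation: Callable[[], T], fallback: Callable[[], T]) -> T:
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                return fallback()  # open: fail fast and protect the upstream
            self.opened_at = None  # half-open: allow a trial call
            self.failures = self.failure_threshold - 1  # one more failure re-opens
        try:
            result = operation()
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            return fallback()  # degrade gracefully instead of failing the request
        self.failures = 0
        return result
```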

What does your team do on the first day to ensure that the system is designed from the outset with failures and fault tolerance in mind?

In enterprise .NET development, we analyze metrics and the project code and identify bottlenecks in the database and external services.

Observability: Seeing Problems Before They Hit Users

What are the main components of the observability stack that your team uses with .NET today, and how do they work together to provide a complete picture?

As a rule, we use all three (metrics, logs, and traces). We apply logs to analyze what is happening inside the program. Traces help you perform a complete analysis of the call path to all related services and components (broker, database query). And metrics show the state of the system (memory consumption, latency, etc.).

Why has OpenTelemetry become the standard, and what advantages does it offer over proprietary solutions?

It provides a vendor-neutral, open-source framework for collecting metrics, logs, and traces across heterogeneous systems. Major cloud providers, observability vendors, and language communities (including .NET) support OpenTelemetry. It eliminates lock-in and ensures consistent telemetry regardless of deployment.

Compared to proprietary solutions, OpenTelemetry offers greater flexibility, reduced costs, and future-proofing. You can route data to multiple backends, avoid dependency on a single vendor’s SDK, and leverage a unified instrumentation approach across services. In .NET for high-load systems, this means less overhead, standardized context propagation (like Activity and Baggage), and seamless integration with modern platforms without sacrificing performance or control.
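Standardized context propagation lets a trace follow a request across services. By default, OpenTelemetry propagates context with the W3C Trace Context `traceparent` header, formatted as `version-traceid-parentid-flags`. The Python sketch below parses and creates version-00 headers. It is a simplified illustration, not the OpenTelemetry SDK; in .NET, `System.Diagnostics.Activity` handles this for you. The function and class names are hypothetical.

```python
import re
import secrets
from dataclasses import dataclass
from typing import Optional

TRACEPARENT_RE = re.compile(r"^00-([0-9a-f]{32})-([0-9a-f]{16})-([0-9a-f]{2})$")


@dataclass(frozen=True)
class SpanContext:
    trace_id: str
    span_id: str
    sampled: bool


def parse_traceparent(header: str) -> Optional[SpanContext]:
    """Parse a version-00 traceparent header; return None if it is invalid."""
    m = TRACEPARENT_RE.match(header.strip())
    if not m:
        return None
    trace_id, span_id, flags = m.groups()
    if trace_id == "0" * 32 or span_id == "0" * 16:
        return None  # all-zero ids are invalid per the specification
    return SpanContext(trace_id, span_id, sampled=bool(int(flags, 16) & 0x01))


def child_traceparent(parent: SpanContext) -> str:
    """Header for an outgoing call: same trace id, new span id, same sampling decision."""
    flags = "01" if parent.sampled else "00"
    return f"00-{parent.trace_id}-{secrets.token_hex(8)}-{flags}"
```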

Compared to proprietary solutions, OpenTelemetry offers greater flexibility, reduced costs, and future-proofing. © Denis Strukachev

How do you determine what “good observability” looks like, and what metrics or alerts are mandatory for a critical service?

We analyze P95 and P99 for latency and error codes. Also, it’s a good practice to analyze cache or fallback hits, message lag in the broker, and system health checks.

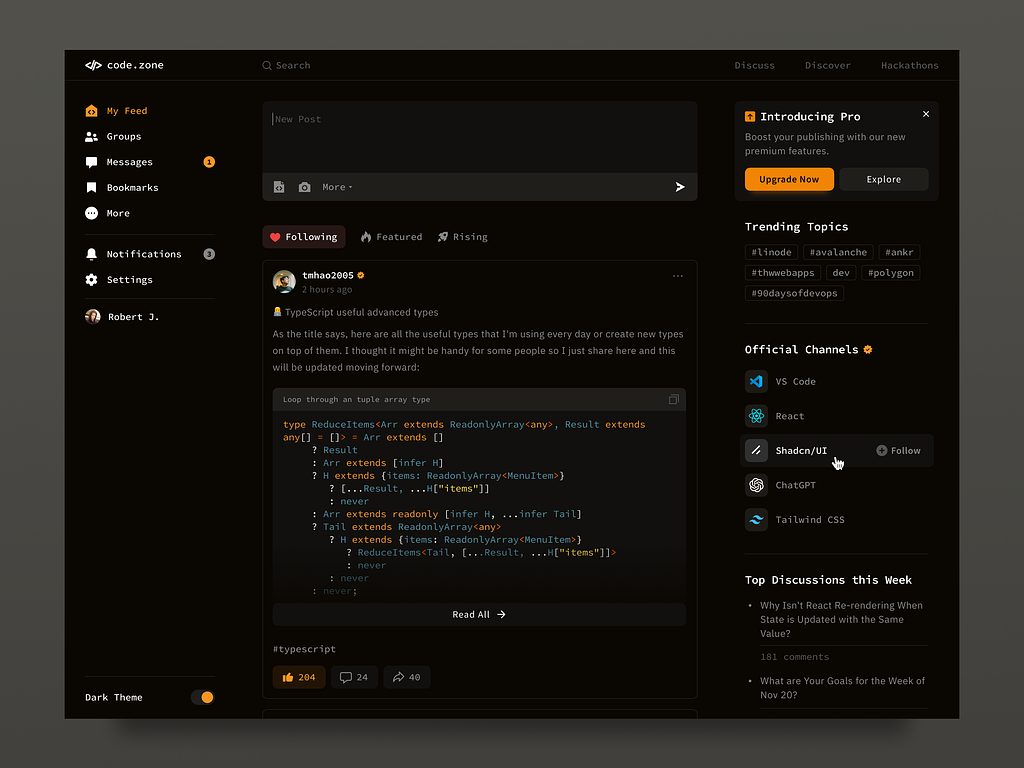

Social Web Platform for Developers by Shakuro

Real Case Study: A High-Load Problem We Solved at Shakuro

One of the scalable .NET applications I worked on had an issue: the system generated a large number of events that had to be sent to Kafka via Outbox.

The challenge and initial system state

When hundreds of thousands or even millions of events came in, sending them to Kafka became a problem. We needed exactly-once delivery semantics. We tried the Outbox pattern, but there were so many events that it couldn’t keep up with sending them. In addition, cleaning up old events triggered frequent autovacuum runs in PostgreSQL, which also degraded database performance.

I gathered all the information about the problem from logs and metrics (database timeout errors, autovacuum launches, database load, etc.).

The redesigned architecture and reasoning

We analyzed the problem and decided to use Kafka’s built-in idempotency mechanism and transaction mode. We also started requesting data in large batches, reducing the frequency of requests. We split each batch into smaller portions and sent them to Kafka in transactions. In case of a failure, we resent only the affected portion of the batch, and thanks to idempotency, Kafka discarded duplicates of messages that had already been sent. To quickly delete old records, we applied table partitioning.
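The control flow described above can be simulated in memory: split a large batch into portions, send each portion in a transaction, retry only the failed portion, and rely on deduplication. In the Python sketch below, `FakeTransactionalBroker` is a hypothetical stand-in for a real producer. With Kafka, the .NET Confluent.Kafka client exposes producer transaction methods such as `BeginTransaction` and `CommitTransaction`, and duplicate suppression comes from the idempotent producer (producer ids and sequence numbers), not from application-level ids as in this simulation.

```python
from typing import Iterator, List, Optional, Set


def chunks(items: list, size: int) -> Iterator[list]:
    """Split a large batch into smaller portions."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


class FakeTransactionalBroker:
    """Hypothetical in-memory producer: each portion commits atomically,
    and ids that were already committed are silently dropped."""

    def __init__(self, abort_on: Optional[Set[int]] = None, lose_ack_on: Optional[Set[int]] = None):
        self.log: List[dict] = []  # what consumers would eventually see
        self._committed_ids: Set[str] = set()
        self._attempt = 0
        self.abort_on = abort_on or set()  # attempts that abort before commit
        self.lose_ack_on = lose_ack_on or set()  # attempts that commit but lose the ack

    def send_transaction(self, portion: list) -> None:
        self._attempt += 1
        if self._attempt in self.abort_on:
            raise ConnectionError("transaction aborted; nothing from this portion is visible")
        new = [m for m in portion if m["id"] not in self._committed_ids]
        self.log.extend(new)
        self._committed_ids.update(m["id"] for m in new)
        if self._attempt in self.lose_ack_on:
            raise ConnectionError("commit succeeded but the acknowledgment was lost")


def publish_batch(batch: list, broker: FakeTransactionalBroker, portion_size: int,
                  max_retries: int = 3) -> None:
    """Send a batch portion by portion, retrying only the portion that failed."""
    for portion in chunks(batch, portion_size):
        for attempt in range(max_retries + 1):
            try:
                broker.send_transaction(portion)
                break
            except ConnectionError:
                if attempt == max_retries:
                    raise
    # Afterwards, the batch status would be updated in the database in one transaction (not shown)
```

In a scenario where one transaction aborts and one acknowledgment is lost, every event still ends up in the log exactly once.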

Results and performance improvements

As a result, we started fetching more data from the database at a time, sending it to Kafka in batches, and updating the batch status in the database within a single transaction. This solved the issue with duplicates. The order of events was unimportant (if it had been, we would have had to change our approach). Table partitioning made it possible to delete old records quickly, which reduced the number of autovacuum runs.

The Future of High-Load Enterprise .NET Development

Which improvements in .NET 10 do you consider most significant for creating highly scalable applications?

- JIT optimization: Faster execution via more aggressive method inlining and de-virtualization.

- New hardware support: Automatic leverage of modern CPU instructions for math-intensive workloads.

- Reduced GC pauses: Improved Garbage Collection through expanded stack allocation for small, short-lived objects.

- Efficient JSON processing: Faster, lower-allocation streaming JSON parsing using PipeReader integration in System.Text.Json.

- Native container publishing: Simplified DevOps with direct, optimized container image generation, resulting in smaller images and faster cold starts.

- Deep OpenTelemetry integration: Enhanced, built-in support for observability to easily monitor distributed microservices.

- Optimized EF Core 10: Better LINQ query translation for efficient data access under high concurrency.

- Simplified real-time APIs: New WebSocketStream for building high-capacity, low-latency real-time communication applications.

How will NativeAOT change calculations when deploying high-load, cloud-native .NET services?

It fundamentally changes the deployment calculations, making them significantly cheaper, faster to scale, and more resource-efficient. In short, Native AOT moves .NET from a dynamic runtime model to a native, compiled model, which is the gold standard for high-density, pay-per-use cloud environments.

Native AOT changes the deployment calculations, making them significantly cheaper, faster to scale, and more resource-efficient. © Denis Strukachev

What are the two or three most influential industry trends that will fundamentally change the way to build a high-load .NET architecture over the next 3–5 years?

First of all, AI automation. It comes as no surprise, really, but generative AI and machine learning are shifting the core purpose of high-load services from simple data processing to intelligence delivery. We have to optimize new architectures for inference (running models), vector data (semantic search), and workflow orchestration (managing complex AI/human pipelines).

Second, edge computing. Processing is moving closer to the user to meet the demand for ultra-low latency, which in turn is driven by richer user experiences and real-time IoT/machine-to-machine needs. The architecture is no longer just “cloud-first”; it’s “edge-first.”

* * *

Written by Mary Moore and Denis Strukachev